Automatic Speech Recognition, also known as ASR or Voice Recognition, is a term you've probably heard a lot in recent years. In a sentence:

ASR is a series of technologies used to automatically process audio data (phone calls, voice searches on your phone, podcasts, etc.) into a format computers can understand. That format is often readable text, and producing it is a necessary first step to figuring out what cool information is hiding in voice recordings.

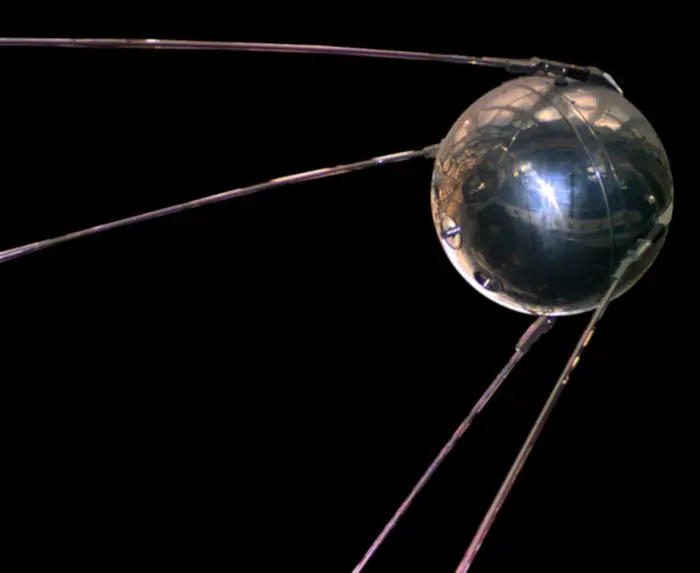

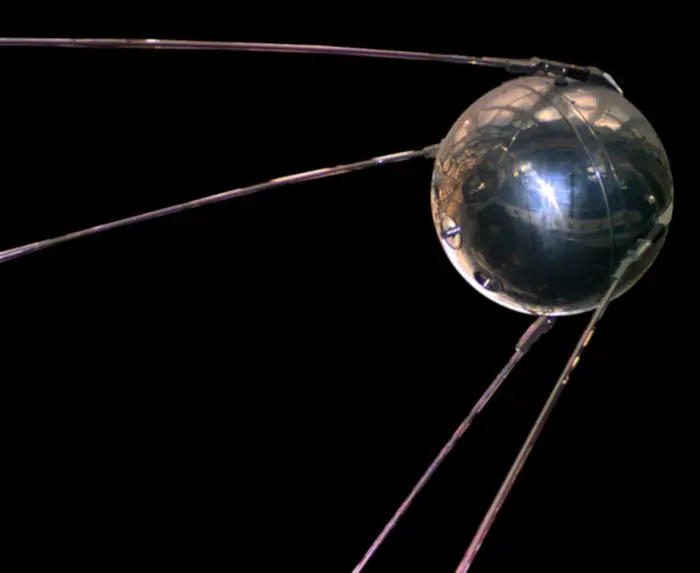

Since its fledgling beginnings in the 1950s, ASR has come a long way: once an ARPA (DARPA) project in the 1970s, then a frustrating dictation product in the 1990s, it is a buzzword today.

News of the successful launch of the first artificial satellite Sputnik by the USSR (1957) spurred President Eisenhower to create ARPA (Advanced Research Projects Agency) in 1958. This agency is responsible for the creation of some minor 20th century inventions such as the computer mouse and the Internet, as well as every Tom Clancy plot ever.

Since the advent of products like Siri, Alexa, and Google Home, there has been a lot of excitement around the term. This is because ASR technologies are the driving force behind a wide array of modern products. If you have a smartphone (66% of living humans do, as does at least one rhesus monkey), then you have ASR technologies at your fingertips. ASR is also used by 83% of businesses to transcribe speech, according to respondents in The State of Voice 2022. However, like so many oft-thrown-about terms, ASR remains nebulous.

What is ASR really?

What does ASR do?

How can I use ASR?

What are the limitations of ASR?

Why should I care about it?

Our goal in this and subsequent articles is to answer some of these questions and, perhaps, ask a few questions of our own.

What is ASR?

Well, as you may have gathered from the first sentence above, ASR stands for Automatic Speech Recognition. The purpose of ASR is to convert audio data into data formats that data scientists use to get actionable insights for business, industry and academia. Most often, that data format is a readable transcript. Sounds simple, and in principle it is. Let's unpack the three words behind ASR so we can make more sense of what is going on:

The term automatic refers to the fact that, after a certain point, machines are doing some human task without any human intervention. Speech data in, machine-readable data out.

The term speech tells us that we are working with audio data (technically, any audio data). This ranges from a noisy recording of an angry customer calling from a 16-lane highway in Los Angeles to super-crisp, extra bass-y podcast audio.

The term recognition tells us that our goal here is to convert audio into a format that computers can understand (often a text transcript). In order to do neat things with audio data, such as triggering a command to buy something online (think: OK Google) or figuring out what sort of phone sales interactions lead to better sales numbers, you need to convert audio data into a parsable data format for machines (and humans) to analyze.
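To make "parsable data format" a little more concrete, here is a minimal sketch of what can happen once speech has already been turned into a text transcript. It assumes the transcript arrives as a plain Python string; the function name `extract_command` and the sample sentence are made up for illustration, and real voice assistants do far more than this.

```python
import re
from typing import Optional

# Wake phrase borrowed from the "OK Google" example in the text.
WAKE_PHRASE = "ok google"


def extract_command(transcript: str, wake_phrase: str = WAKE_PHRASE) -> Optional[str]:
    """Return the words that follow the wake phrase, or None if there are none.

    Hypothetical helper: it only illustrates that text, unlike raw audio,
    is easy for a program to search and act on.
    """
    # Lowercase, turn punctuation into spaces, and collapse extra whitespace.
    normalized = re.sub(r"[^a-z0-9' ]+", " ", transcript.lower())
    normalized = " ".join(normalized.split())

    # Look for the wake phrase as whole words and capture whatever follows it.
    match = re.search(r"\b" + re.escape(wake_phrase) + r"\b(.*)", normalized)
    if match is None:
        return None
    command = match.group(1).strip()
    return command or None


# Example transcript, as an ASR system might return it (made up for this sketch).
print(extract_command("OK Google, buy more coffee filters."))
```

The point is not the string matching itself, but that none of it is possible until the audio has been converted into something a program can search.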

However, not all ASR is made equal. There are many approaches to converting speech into text, some better than others.

3 Ways to Do Automatic Speech Recognition

There are essentially three ways to do automatic speech recognition:

The old way

The Frankenstein approach

The new way (End-to-End Deep Learning)

ASR technologies began development in the 1950s and 1960s, when researchers built hard-wired systems (vacuum tubes, resistors, transistors and solder) that could recognize individual words, not sentences or phrases. That technology, as you might imagine, is essentially obsolete (though I do suspect that people who are into vacuum tube amps might still be into it).