A subfield within artificial intelligence that concentrates on equipping machines with the capability to interpret, infer, and respond to human language inputs, often emphasizing semantics, context, and intent beyond mere syntax.

In the realm of artificial intelligence, the ability of machines to grasp and generate human language is a domain rife with intrigue and challenges. At its heart lies Natural Language Understanding (NLU). While ‘language processing’ might evoke images of text going through some form of computational mill, ‘understanding’ hints at a deeper level of comprehension.

NLU is, essentially, the subfield of AI that focuses on the interpretation of human language. It goes beyond mere recognition of words or parsing sentences. NLU endeavors to fathom the nuances, the sentiments, the intents, and the many layers of meaning that our language holds. It’s about the why, not just the what.

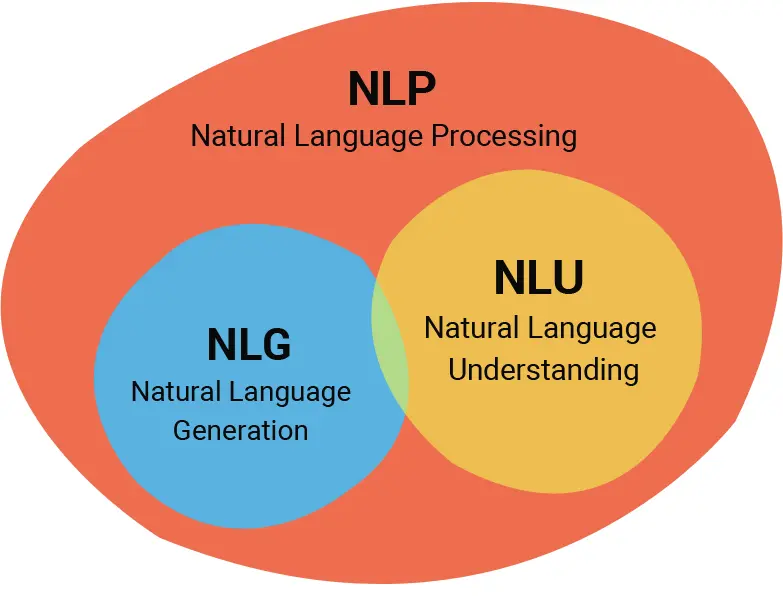

Contrast this with Natural Language Processing (NLP), a broader domain that encompasses a range of tasks involving human language and computation. While NLU is concerned with comprehension, NLP covers the entire gamut, from tokenizing sentences (breaking them down into individual words or phrases) to generating new text. Think of NLP as the vast ocean, with NLU as a deep and complex trench within it.

By aismartz, CC BY-SA 4.0

The quest to give machines the ability to understand our language is of immense importance. Imagine the possibilities: machines that provide context-aware responses, tools that decipher intricate instructions, or software that interprets free-form user feedback without a hand-written rule for every possible phrasing. Yet this endeavor isn’t without its challenges. The intricacies of human language, filled with idioms, metaphors, cultural contexts, and ambiguities, present a vast puzzle for AI.

In this exploration, we’ll delve deeper into the nuances of NLU, tracing its evolution, understanding its core components, and recognizing its potential and pitfalls.

Historical Context

The journey of NLU has been both fascinating and complex. Let’s wind back the clock and understand its beginnings and the pivotal shifts that have occurred over the years.

Early Birds in the NLU Space:

Eliza: Developed by Joseph Weizenbaum at MIT in the mid-1960s, Eliza was one of the first attempts to create a program that could emulate human conversation. Its best-known script, DOCTOR, mimicked a Rogerian psychotherapist, often rephrasing users’ statements as questions through simple pattern matching and substitution. While rudimentary, Eliza showcased the allure of human-computer interaction through language.
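Eliza's core trick, matching a statement against a pattern and reflecting the captured fragment back as a question, can be sketched in a few lines of Python. The rules, templates, and pronoun table below are illustrative assumptions, not a reconstruction of the original DOCTOR script:

```python
import re

# Illustrative pronoun swaps so a reflected fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# (pattern, response template) pairs, tried in order. These are invented
# for this sketch; they are not Weizenbaum's original DOCTOR rules.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment: str) -> str:
    """Swap first- and second-person words, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(statement: str) -> str:
    """Return the first matching template, filled with the reflected fragment."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."  # fallback when no rule matches
```

Here `respond("I feel tired of my job.")` returns "Why do you feel tired of your job?", while a statement that matches no rule gets "Please go on." Nothing in the program models what a job is, which is why Eliza's apparent understanding was an illusion.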

SHRDLU: This program, crafted by Terry Winograd in the early 1970s, operated in a virtual “block world”. It could understand and act on user commands about this environment, manipulating geometric blocks based on the directives. SHRDLU was an early example of a system demonstrating a more nuanced understanding of language structure and intent.

The Algorithmic Timeline:

Rule-based Systems: For many years, NLU heavily leaned on hand-crafted, rule-based systems. Linguists and experts would feed machines a web of linguistic rules, helping them make sense of language. While effective for constrained domains, they struggled to scale or handle the vast complexities and exceptions in natural languages.

Statistical Methods: With more computational power and larger datasets, the 1990s and early 2000s saw a shift toward statistical models. Rather than relying on rigid rules, these methods learned patterns, such as how often words co-occur, from large amounts of text. Their strength was in numbers: the more data a model was trained on, the more reliable its estimates tended to be.
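To make "looking for patterns" concrete, here is a minimal bigram language model, one of the simplest statistical approaches: it counts which word follows which and turns those counts into conditional probabilities. The three-sentence corpus is a made-up toy:

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count adjacent word pairs; <s> and </s> mark sentence boundaries."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts


def bigram_prob(counts, prev, word):
    """Maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev, *)."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0


corpus = [
    "the cat chased the mouse",
    "the cat slept",
    "the dog chased the cat",
]
model = train_bigrams(corpus)
```

With this corpus, P(cat | the) is 3/5 = 0.6, because "the" is followed by "cat" three times out of five. Any pair never seen in training gets probability zero, which is one reason practical models apply smoothing and why more data helps.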

Deep Learning: Post-2010, neural networks and deep learning began to dominate the NLU scene. With architectures like RNNs, LSTMs, and later transformers, these models could capture nuances and contexts in language with unprecedented depth. Training on gargantuan datasets, they’ve set new standards in NLU benchmarks.

This history underscores the continually evolving nature of NLU. From humble, rule-based beginnings to the might of neural behemoths, our approach to understanding language through machines has been a testament to both human ingenuity and persistent curiosity.

Core Components of NLU

Understanding human language isn’t merely about recognizing words and their arrangements. It’s about grasping the structure, meaning, and the context in which words are used. This is accomplished through several fundamental components:

Syntax

Sentence Parsing: At its core, sentence parsing is about breaking down a sentence into its constituent parts to discern its structure. This helps in determining relationships between words and how they come together to convey meaning. For instance, in the sentence “The cat chased the mouse”, parsing would help identify “cat” as the subject, “chased” as the verb, and “mouse” as the object.
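A hand-written grammar is enough to show what a parser produces for this example. The sketch below assumes a three-rule grammar and a tiny lexicon that cover only sentences shaped like "Det N V Det N"; practical parsers (constituency or dependency) are trained on treebanks and handle far more structure:

```python
# Toy lexicon and grammar, assumed for illustration:
#   S  -> NP VP
#   NP -> Det N
#   VP -> V NP
LEXICON = {"the": "Det", "a": "Det", "cat": "N", "mouse": "N", "dog": "N",
           "chased": "V", "saw": "V"}


def parse_np(tokens, i):
    """Parse Det N starting at position i; return (tree, next_index)."""
    if (i + 1 < len(tokens) and LEXICON.get(tokens[i]) == "Det"
            and LEXICON.get(tokens[i + 1]) == "N"):
        return ("NP", ("Det", tokens[i]), ("N", tokens[i + 1])), i + 2
    raise ValueError(f"expected a noun phrase at position {i}")


def parse_vp(tokens, i):
    """Parse V NP starting at position i; return (tree, next_index)."""
    if i < len(tokens) and LEXICON.get(tokens[i]) == "V":
        obj, j = parse_np(tokens, i + 1)
        return ("VP", ("V", tokens[i]), obj), j
    raise ValueError(f"expected a verb at position {i}")


def parse_sentence(sentence):
    """Parse S -> NP VP and require that every word is consumed."""
    tokens = sentence.lower().rstrip(".").split()
    subject, i = parse_np(tokens, 0)
    predicate, j = parse_vp(tokens, i)
    if j != len(tokens):
        raise ValueError("unparsed words remain")
    return ("S", subject, predicate)


def roles(tree):
    """Read subject, verb, and object off the S -> NP VP structure."""
    _, subject, (_, (_, verb), obj) = tree
    return {"subject": subject[2][1], "verb": verb, "object": obj[2][1]}
```

`parse_sentence("The cat chased the mouse")` returns a nested S/NP/VP tree, and `roles()` reads off "cat", "chased", and "mouse"; a word order the grammar does not cover raises `ValueError`.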

Part-of-speech Tagging: Every word has a role to play, and whether a word acts as a noun, verb, adjective, or adverb can change the meaning of a sentence significantly. Part-of-speech tagging is the process of labeling each word with its grammatical category based on its function in the sentence. It’s foundational to many NLU tasks, ensuring that “book” as a noun in “I read a book” is distinguished from “book” as a verb in “Please book a ticket”.
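A minimal rule-based tagger shows how context resolves the ambiguity of "book". The lexicon and the single contextual rule below are assumptions for illustration (tag names follow the Universal Dependencies POS tag set); statistical and neural taggers learn such preferences from annotated corpora instead:

```python
# Hypothetical mini-lexicon: each word maps to the tags it can take.
TAG_LEXICON = {
    "i": {"PRON"}, "read": {"VERB"}, "a": {"DET"}, "the": {"DET"},
    "please": {"INTJ"}, "ticket": {"NOUN"},
    "book": {"NOUN", "VERB"},  # ambiguous: noun or verb
}
DETERMINERS = {"a", "an", "the"}


def tag(sentence):
    """Tag each word; resolve NOUN/VERB ambiguity with one contextual rule."""
    words = sentence.lower().rstrip(".!?").split()
    tagged = []
    for i, word in enumerate(words):
        options = TAG_LEXICON.get(word, {"NOUN"})  # unknown words default to NOUN
        if len(options) == 1:
            tagged.append((word, next(iter(options))))
        else:
            previous = words[i - 1] if i > 0 else None
            # Rule: right after a determiner, prefer NOUN; otherwise prefer VERB.
            tagged.append((word, "NOUN" if previous in DETERMINERS else "VERB"))
    return tagged
```

In "I read a book" the determiner "a" precedes "book", so it is tagged NOUN; in "Please book a ticket" it is not, so it is tagged VERB.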

Semantics

Word Embeddings and Vector Space Models: While words are symbols for humans, machines operate on numbers. Word embeddings map words to vectors of numbers such that semantically similar words have similar vectors. This numeric representation, often produced by models like Word2Vec or GloVe, lets machines capture the meanings of words and the relationships between them.
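Similarity between embeddings is commonly measured with cosine similarity, the cosine of the angle between two vectors. The three-dimensional vectors below are invented purely for illustration; real Word2Vec or GloVe vectors are learned from large corpora and commonly have 50 to 300 dimensions:

```python
import math

# Made-up vectors for illustration only; not taken from any trained model.
VECTORS = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.12],
    "apple": [0.05, 0.10, 0.90],
}


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)
```

With these toy vectors, "king" and "queen" score about 0.999 while "king" and "apple" score about 0.21, mirroring the intuition that related words sit close together in the vector space.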

Semantic Role Labeling: This digs deeper into the roles that different parts of a sentence play in relation to the main verb. For instance, in “Jenny gave a book to Sam”, semantic role labeling identifies “Jenny” as the giver, “book” as the thing being given, and “Sam” as the receiver.
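A pattern-based sketch for a single frame shows the kind of output semantic role labeling produces. The regular expression below handles only "X gave Y to Z" sentences and is an assumption for illustration; real SRL systems are trained on resources such as PropBank or FrameNet and label roles for arbitrary predicates:

```python
import re

# One hand-written frame for "give": AGENT gave THEME to RECIPIENT.
GIVE_PATTERN = re.compile(
    r"(?P<agent>\w+) (?:gave|gives|give) "
    r"(?P<theme>(?:a |an |the )?\w+) to (?P<recipient>\w+)",
    re.IGNORECASE,
)


def label_give(sentence):
    """Return role labels for a 'give' sentence, or None if it does not match."""
    match = GIVE_PATTERN.search(sentence)
    if match is None:
        return None
    return {"predicate": "give", **match.groupdict()}
```

`label_give("Jenny gave a book to Sam")` labels "Jenny" as the agent (giver), "a book" as the theme (thing given), and "Sam" as the recipient.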

Pragmatics

Contextual Understanding: Words don’t exist in isolation. Their meaning can shift based on the context in which they’re used. A word like “bank” can mean a financial institution or the side of a river, depending on the surrounding words. Grasping this context is crucial for true language understanding.
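One classic way to choose between the senses of "bank" is the simplified Lesk algorithm: pick the sense whose dictionary definition shares the most words with the surrounding sentence. The sense names, glosses, and stopword list below are hand-written assumptions (a real system would draw glosses from a resource such as WordNet):

```python
# Hypothetical sense inventory with short glosses.
SENSES = {
    "bank": {
        "financial_institution": "an institution that accepts deposits of money and makes loans",
        "river_bank": "sloping land beside a river or stream where water flows",
    }
}
STOPWORDS = {"a", "an", "the", "of", "or", "and", "to", "at", "in", "on",
             "that", "where", "we", "i"}


def content_words(text):
    """Lowercase, strip punctuation, and drop stopwords."""
    return {w.strip(".,!?").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(word, sentence):
    """Pick the sense whose gloss overlaps most with the sentence's content words.

    Ties (including zero overlap everywhere) go to the first listed sense.
    """
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense
```

"She took out loans and deposited money at the bank" shares "loans" and "money" with the financial gloss, while "We sat on the bank of the river watching the water" shares "river" and "water" with the riverside gloss.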

Discourse Coherence: Beyond individual sentences, NLU also seeks to understand the coherence and structure of longer discourses. This involves grasping how different sentences relate to and build upon each other in a conversation or a narrative.
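Full discourse modeling is hard, but a crude proxy for one ingredient, lexical cohesion, fits in a few lines: score each pair of adjacent sentences by the overlap (Jaccard similarity) of their content words. The stopword list is an assumption, and the measure is deliberately naive:

```python
STOPWORDS = {"a", "an", "the", "it", "its", "is", "was", "and", "of", "to", "then", "they"}


def content_words(sentence):
    """Lowercase, strip punctuation, and drop stopwords."""
    return {w.strip(".,!?").lower() for w in sentence.split()} - STOPWORDS


def cohesion_scores(sentences):
    """Jaccard overlap of content words for each adjacent sentence pair."""
    scores = []
    for first, second in zip(sentences, sentences[1:]):
        a, b = content_words(first), content_words(second)
        union = a | b
        scores.append(len(a & b) / len(union) if union else 0.0)
    return scores
```

For "The cat chased the mouse.", "The mouse hid under the sofa.", "Stock prices rose sharply." the scores are 1/6 and 0.0, flagging the abrupt topic change. The measure's limits show quickly: "It hid under the sofa." also scores 0.0 after the first sentence, because nothing here knows that "it" refers to the mouse.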

In essence, these components act as the pillars of NLU. They enable machines to approach human language with a depth and nuance that goes beyond mere word recognition, making meaningful interactions and applications possible.

Deep Learning in NLU

As the digital landscape has evolved, so too has our approach to Natural Language Understanding. Among the most transformative shifts has been the integration of deep learning. Here’s how it has reshaped NLU:

Rise of Neural Networks and Their Impact

Neural Beginnings: Previously, many NLU systems relied heavily on handcrafted rules or on statistical methods with manually engineered features. The advent of neural networks marked a paradigm shift. These networks, loosely inspired by the structure of the brain, could learn useful features automatically from vast amounts of data instead of having them specified by hand.

Efficiency and Efficacy: Neural networks, especially deep neural networks with multiple layers, allowed for the capture of intricate patterns in data. In the realm of NLU, this translated to a more refined understanding of language nuances, contexts, and idiosyncrasies. Tasks that were previously challenging, like sarcasm detection or sentiment analysis, became more approachable.

Transformer Architectures: BERT, GPT, and Beyond

Transformers’ Arrival: The transformer architecture, introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., revolutionized NLU. It dispensed with recurrence and relied entirely on a mechanism called ‘attention’, which lets the model weigh how relevant every part of the input is to every other part, akin to how humans focus on particular words or phrases when comprehending text.
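The attention operation from that paper is softmax(QK^T / sqrt(d_k)) V: each query vector is compared with every key vector, the scaled scores are turned into weights by a softmax, and the output is the weighted average of the value vectors. The pure-Python sketch below implements a single head and, as a simplification, omits the learned projection matrices and multi-head machinery of a real transformer:

```python
import math


def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.

    Returns the output vectors and the attention weights for each query.
    """
    d_k = len(keys[0])
    outputs, weights_per_query = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        weights_per_query.append(weights)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, weights_per_query
```

A query that points strongly toward one key, such as `[10.0, 0.0]` against keys `[1.0, 0.0]` and `[0.0, 1.0]`, puts almost all of its weight on the first key, so its output is almost exactly the first value vector. The sqrt(d_k) scaling keeps dot products from growing with dimension and saturating the softmax.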

BERT (Bidirectional Encoder Representations from Transformers): Developed by Google and released in 2018, BERT was groundbreaking because of its bidirectional understanding of text. Rather than conditioning only on the words that come before a given word, BERT is pre-trained by predicting masked-out words from the context on both sides, so each word’s representation draws on its left and right context at once.

GPT (Generative Pre-trained Transformer): OpenAI’s GPT models, especially the later versions, are remarkable not just for understanding text but also for generating human-like text. They are autoregressive: they process text left to right and predict each next token from the tokens before it. Pre-trained on vast corpora, these models can be fine-tuned for specific tasks, and they have set new standards on various NLU benchmarks.
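One architectural difference between the two families can be expressed as an attention mask. In a BERT-style encoder every token may attend to every other token, while a GPT-style decoder uses a causal mask so each token sees only itself and earlier tokens. The sketch below is a simplification that ignores padding masks and BERT's masked-word training objective:

```python
import math


def attention_mask(n, causal):
    """mask[i][j] is True when token i may attend to token j."""
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]


def apply_mask(scores, mask):
    """Replace disallowed scores with -inf so softmax assigns them zero weight."""
    return [[s if allowed else -math.inf for s, allowed in zip(row, mask_row)]
            for row, mask_row in zip(scores, mask)]


bert_style = attention_mask(4, causal=False)  # every token sees the whole sentence
gpt_style = attention_mask(4, causal=True)    # token i sees tokens 0..i only
```

The causal mask is lower-triangular: the first token can attend only to itself, while the last token can attend to all four. Because exp(-inf) is 0, masked positions receive exactly zero attention weight after the softmax.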

Challenges in the Deep Learning Era

Interpretability: One significant hurdle with deep neural models, including transformers, is their ‘black box’ nature. It’s often challenging to pinpoint why they make specific decisions, which can be crucial in applications where transparency is essential.

Bias: Models learn from data. If this data has biases, which it often does, the models inherit them. This has led to instances where NLU models display unintended and sometimes harmful biases, raising ethical concerns.

Computational Costs: Training state-of-the-art models requires immense computational power and resources. This not only has environmental implications but can also restrict access to advanced NLU technologies to organizations with significant resources.

Deep learning’s impact on NLU has been monumental, bringing about capabilities previously thought to be decades away. However, as with any technology, it’s accompanied by its set of challenges that the research community continues to address.

Practical Applications of NLU

The advancements in Natural Language Understanding haven’t just been academic exercises. They’ve paved the way for a multitude of real-world applications that touch our daily lives in subtle and profound ways. Let’s unpack some of the prominent areas where NLU has made its mark:

Chatbots and Virtual Assistants

Automated Interactions: With the rise of digital platforms, businesses sought ways to interact with customers 24/7. Enter chatbots — automated systems that can handle queries, complaints, or even just casual banter. Through NLU, these bots understand user intent and respond coherently, making them indispensable for customer support, sales, and more.
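Intent detection is often framed as text classification. The sketch below uses multinomial naive Bayes with add-one smoothing, a classic baseline rather than what any particular product uses; the intents and training utterances are hypothetical:

```python
import math
from collections import Counter, defaultdict

# Hypothetical training utterances, each labeled with an intent.
TRAINING = [
    ("where is my order", "track_order"),
    ("track my package", "track_order"),
    ("has my order shipped yet", "track_order"),
    ("i want a refund", "refund"),
    ("refund my money please", "refund"),
    ("hello there", "greet"),
    ("hi good morning", "greet"),
]


def train(examples):
    """Count words per intent and how many examples each intent has."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    for text, intent in examples:
        intent_counts[intent] += 1
        word_counts[intent].update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, intent_counts, vocab


def classify(text, model):
    """Return the intent with the highest log posterior under naive Bayes."""
    word_counts, intent_counts, vocab = model
    total_examples = sum(intent_counts.values())
    best_intent, best_score = None, -math.inf
    for intent, n_examples in intent_counts.items():
        denominator = sum(word_counts[intent].values()) + len(vocab)
        score = math.log(n_examples / total_examples)  # log prior
        for word in text.lower().split():
            if word in vocab:  # ignore words never seen in training
                # Add-one (Laplace) smoothing keeps unseen (word, intent) pairs nonzero.
                score += math.log((word_counts[intent][word] + 1) / denominator)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


model = train(TRAINING)
```

With this toy data, "where is my package" is classified as `track_order` and "i want my money back" as `refund`, even though neither sentence appears in the training set; the unseen word "back" is simply ignored.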

Siri, Alexa, and Friends: NLU is the brain behind your favorite voice assistants. Whether you’re asking Siri for the weather, Alexa to play a song, or Google Assistant for a recipe, speech recognition first converts your voice to text, and NLU then decodes your request so the assistant can serve up an apt response or action.

Sentiment Analysis and Social Listening

Pulse of the Public: Brands and businesses thrive on feedback. Sentiment analysis uses NLU to sift through heaps of social media posts, reviews, or comments, gauging whether the overall sentiment is positive, negative, or neutral. It’s like having a finger on the pulse of public opinion in real time.
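A lexicon-based scorer is the simplest form of sentiment analysis: sum per-word polarity scores, flipping the sign after a negation. The tiny lexicon, negator list, two-word negation window, and threshold below are all assumptions for illustration; published lexicons such as VADER's contain thousands of scored entries, and modern systems mostly use trained classifiers:

```python
# Hypothetical polarity lexicon and negators, for illustration only.
POLARITY = {"love": 2, "great": 2, "good": 1, "fine": 0.5,
            "bad": -1, "terrible": -2, "hate": -2, "slow": -1}
NEGATORS = {"not", "never", "no", "isn't", "wasn't", "don't"}


def sentiment(text, threshold=0.5):
    """Classify text as positive, negative, or neutral from summed word polarity."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = 0.0
    for i, word in enumerate(words):
        polarity = POLARITY.get(word, 0)
        # Flip polarity if a negator appears among the two preceding words.
        if any(w in NEGATORS for w in words[max(0, i - 2):i]):
            polarity = -polarity
        score += polarity
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"
```

"Delivery was not good and support was terrible." comes out negative: "good" is flipped by the preceding "not", and "terrible" adds to the negative total. Sarcasm and domain-specific phrasing defeat this approach quickly, which is where learned models earn their keep.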

Trend Spotting: Beyond mere sentiment, NLU-driven social listening tools can identify emerging trends, shifts in brand perception, and even potential PR crises by deeply understanding the context and nuances of public discourse.

Information Retrieval and Recommendation Systems

Search Engines’ Smartness: Ever wondered how Google seems to know what you’re looking for, even with a vaguely worded query? It’s NLU working behind the scenes, ensuring search results are relevant not just to your words, but to your intent.
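Before learned models, search ranking leaned heavily on lexical scoring such as TF-IDF, which rewards documents that contain the query's rarer terms. The sketch below is that baseline, with made-up documents; it matches words, not intent, which is precisely the gap NLU-based ranking aims to close:

```python
import math
from collections import Counter


def tfidf_rank(query, documents):
    """Return document indices ranked by summed TF-IDF weight of the query terms."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(documents)
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for idx, tokens in enumerate(tokenized):
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term in tf:
                idf = math.log(n_docs / df[term])  # rarer terms weigh more
                score += (tf[term] / len(tokens)) * idf
        scores.append((score, idx))
    # Highest score first; ties keep their original order.
    return [idx for score, idx in sorted(scores, key=lambda s: -s[0])]


docs = [
    "how to bake sourdough bread at home",
    "best hiking trails near the coast",
    "sourdough starter feeding schedule",
]
```

For the query "sourdough bread recipe", `tfidf_rank` returns `[0, 2, 1]`: the first document matches both "sourdough" and the rarer "bread", while "recipe" matches nothing and contributes nothing. A query like "how do I make my own loaf" would score zero everywhere, despite obviously wanting document 0.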

Personalized Content: Why do streaming platforms like Netflix or Spotify seem to ‘get’ your tastes? Recommendation systems. They analyze your interactions and preferences, and NLU can contribute an analysis of the semantics of the content itself, helping to serve up suggestions that resonate.

In essence, NLU, once a distant dream of the AI community, now influences myriad aspects of our digital interactions. From the movies we watch to the customer support we receive — it’s an invisible hand, guiding and enhancing our experiences.

Tools and Frameworks

The surge in interest and applications of Natural Language Understanding has been supported by a vast ecosystem of tools and frameworks. These resources, often open-source, empower developers, researchers, and businesses to harness the power of NLU without reinventing the wheel. Here’s a brief look at some of the front-runners:

Popular NLU Libraries

spaCy: A favorite among many professionals, spaCy is an open-source library for advanced natural language processing in Python. It’s designed to be fast and efficient, offering pre-trained word vectors, tokenization, part-of-speech tagging, named entity recognition, and more. Plus, it’s built to seamlessly integrate with deep learning frameworks like TensorFlow and PyTorch.

NLTK (Natural Language Toolkit): A comprehensive library for Python, NLTK has been a staple for those starting in NLP and NLU. It offers easy-to-use interfaces for over 50 corpora and lexical resources, along with utilities for text processing, classification, tokenization, stemming, and tagging.

Platforms Tailored for NLU Tasks

Rasa: An open-source machine learning framework designed explicitly for developing conversational AI, like chatbots and voice apps. Rasa lets developers build context-aware bots that can handle multi-turn conversations, and because it can be deployed on-premise, user data can stay on infrastructure the organization controls.

Dialogflow (by Google): This cloud-based platform allows users to build voice and text-based conversational experiences for various applications, including chatbots, voice apps, and customer support tools. With built-in capabilities to understand multiple languages and natural conversational flows, Dialogflow leverages Google’s deep learning and NLU advancements for a smooth user experience.

These tools and platforms, while just a snapshot of the vast landscape, exemplify the accessible and democratized nature of NLU technologies today. By lowering barriers to entry, they’ve played a pivotal role in the widespread adoption and innovation in the world of language understanding.

Ethical Considerations

As with any technology that has profound societal implications, Natural Language Understanding comes with its set of ethical challenges to navigate:

Biases in Language Models

Mirroring Society: Language models learn from vast amounts of text data available on the internet. If this data contains biases — and it often does — the models can reflect and amplify them. This can lead to models making stereotypical, prejudiced, or harmful predictions.

Proactive Mitigation: Recognizing this, researchers and developers are actively working on debiasing techniques and fairness audits to reduce the risk that models perpetuate harmful stereotypes.

Privacy Concerns in NLU Applications

Data Sensitivity: Conversational AIs, whether in customer support or healthcare, can sometimes deal with sensitive information. Ensuring that this data isn’t misused or exposed is paramount.

On-Premise Solutions: Platforms like Rasa, which allow on-premise deployments, can help alleviate privacy concerns by giving businesses control over their data.

The Importance of Transparency and Fairness

Black Box Challenge: As NLU models grow in complexity, their interpretability becomes a concern. Understanding why a model makes a certain prediction can be crucial, especially in high-stakes scenarios.

Open Research and Standards: The push toward open research, benchmarking standards, and transparent methodologies helps keep NLU development accountable and explainable.

Future of NLU

While the strides in NLU have been remarkable, the journey is far from over. Here’s a peek into what the future might hold:

Current Research Frontiers

Continual Learning: How can models adapt and learn over time without forgetting previous knowledge? Addressing this challenge can make NLU systems more dynamic and adaptive.

Multimodal Learning: Combining language with other data forms, like visual or auditory inputs, can lead to richer and more holistic AI systems.

The Role of Advanced Hardware

Quantum Computing: While still in its nascent stages, quantum computing may dramatically speed up certain classes of computation; whether it will revolutionize model training and deployment remains an open question.

Neuromorphic Chips: These chips, inspired by the structure of the human brain, could offer more efficient processing for neural networks, making real-time NLU applications more feasible.

The Ongoing Collaboration

Linguists and Computer Scientists: The melding of linguistic theories with computational methodologies is ushering in deeper insights into language structure and cognition, propelling NLU forward.

Conclusion

Natural Language Understanding, a field that sits at the nexus of linguistics, computer science, and artificial intelligence, has opened doors to innovations we once only dreamt of. From voice assistants to sentiment analysis, the applications are as vast as they are transformative. However, as with all powerful tools, the challenges — be it biases, privacy, or transparency — demand our attention. In this journey of making machines understand us, interdisciplinary collaboration and an unwavering commitment to ethical AI will be our guiding stars.