How to Add Speech AI Into Your Next.js App

Bekah Hawrot Weigel

I am a podcast addict. I admit it. From the moment I wake up, I have my headphones in my ears. But there are times when listening to a podcast in a room full of other people would be frowned upon. In some of those situations, though, it's perfectly acceptable for me to look at my phone. So as a compromise, I could grab the transcript of my favorite podcast and read it instead. I know I'm not the only one who's dreamt of doing that.

From that desire to get away with podcast listening, I put together a basic Next.js and Deepgram web app. The user submits a link to an audio file, Deepgram transcribes it, and the transcript is displayed in the browser. If you, too, want to get away with reading your podcasts, check out the tutorial below.

Getting Started with Repl.it, Next, and Deepgram

Prerequisites

Understanding of JavaScript and React

Familiarity with React hooks

Understanding of HTML and CSS

For this project, I worked with Repl.it, an instant IDE that runs in the browser. You can find the final project on my Next + DG Repl, and this tutorial explains how to use Repl to create your own.

Getting Started

To complete this project, you’ll need:

A Deepgram API Key - get it here

Getting Started with Next.js

Create a new Repl using the Next.js template. This gives you the basic Next.js file structure and access to Next.js's built-in features. If you'd like to learn more about Next.js, check out the Foundations section of their site. For this project, we'll be working inside the pages folder, both in the index.tsx file and in the api folder.

We're going to keep it simple and add our new code to the existing template. You'll notice that I've updated my Repl to link to Deepgram's documentation, blog, and community, but our code to transcribe the audio will go above those links.

In the index.tsx file, we'll need to create an input for the audio file link, add some useState hooks to handle the submission, transcription, and formatting, and write a transcribe function. In the api folder, we'll need to add the server-side code that handles the request to Deepgram. Lastly, we'll use Repl's Secrets feature to store our Deepgram API key.

Let's get started with the front-end code in the index.tsx file. At the top of the file, import the dependencies the template doesn't already include: React's useState and useEffect hooks.

Above the resources section, add a form with an input for the audio file link.

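```tsx
<form>
  <label htmlFor="audio-file">Link to Audio</label>
  {/* Store the typed URL in state on every change */}
  <input onChange={e => setFile(e.target.value)} type="text" id="audio-file" name="audio-file" required />
  {/* type="button" stops the click from submitting the form, so transcribe runs directly */}
  <button type="button" onClick={transcribe} className={styles.button}>Transcribe</button>
</form>
```

To get this to work, we need a useState hook that provides setFile. On line 8, add const [file, setFile] = useState(''); so the input starts out empty.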

This lets our application keep track of the URL of the audio file. Now we need to take that audio file and transcribe it. Grab your API key and add it to your Repl Secrets under the name DG_API_KEY, which is the name our server-side code will read.
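Because the input is free text, it can help to check that the value looks like a web link before sending it anywhere. The sketch below uses a hypothetical helper, isHttpUrl, which is not part of the original app. It relies only on the standard URL class and accepts absolute http or https URLs.

```typescript
// Hypothetical helper (not part of the original app): returns true only for
// absolute http:// or https:// URLs, so empty or whitespace-only input
// is rejected before any request is made.
function isHttpUrl(value: string): boolean {
  const trimmed = value.trim();
  if (trimmed === "") return false;
  try {
    const parsed = new URL(trimmed);
    return parsed.protocol === "http:" || parsed.protocol === "https:";
  } catch {
    // new URL() throws on strings that are not absolute URLs
    return false;
  }
}
```

Inside the transcribe function, you could return early with if (!isHttpUrl(file)) return; before calling the API route.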

We're going to go back and forth between files here. Let's start by creating a new file in our api folder called transcribe.tsx. This API route will hold our server-side logic and is where we'll use our API key, so the key never reaches the browser. According to the Next.js documentation on API routes, "Any file inside the folder pages/api is mapped to /api/* and will be treated as an API endpoint instead of a page. They are server-side only bundles and won't increase your client-side bundle size." We're going to create an async function that handles the incoming request and sends a response back to the client.

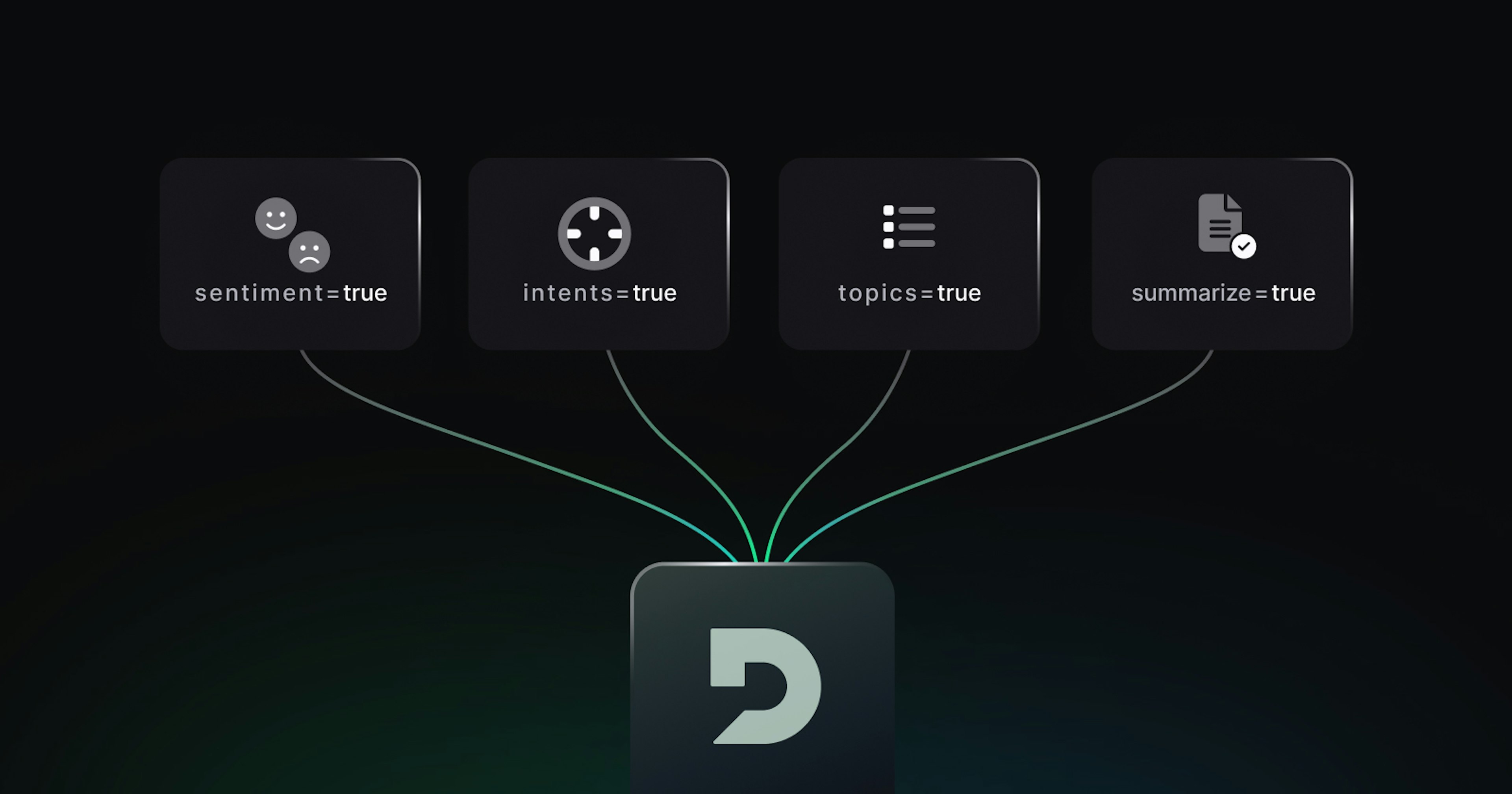
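The following small function makes the file-to-endpoint rule concrete. apiRouteFor is a hypothetical name, and the function only mimics the documented mapping for simple cases. It is not how Next.js implements routing, and it deliberately ignores dynamic segments such as [id].

```typescript
// Illustration only, NOT Next.js's implementation: maps a file under
// pages/api to the endpoint path it is served at, for simple file names.
function apiRouteFor(filePath: string): string | null {
  const match = /^pages\/api\/(.+)\.(ts|tsx|js|jsx)$/.exec(filePath);
  if (match === null) return null; // not an API route file
  const segments = match[1].split("/");
  // An index file maps to its folder, e.g. pages/api/index.ts -> /api
  if (segments[segments.length - 1] === "index") segments.pop();
  return ["/api", ...segments].join("/");
}
```

So pages/api/transcribe.tsx answers requests at /api/transcribe, which is the path our front end will call.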

We'll start by reading the Deepgram API key from the environment variables. Next, we'll use destructuring to extract the url from the parsed body of the incoming request, which we find in req.body. Then we'll use fetch to make a POST request to the Deepgram API endpoint. We await the response from the API, convert it to JSON, and send it back to the client as the response.
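The two request-handling steps just described can also be pulled out into plain functions and tested without Next.js. In this sketch, parseTranscribeBody and buildListenUrl are hypothetical helper names, and the query parameters are the same ones the handler uses. Building the URL with URLSearchParams is an alternative to writing the query string by hand. It keeps every & separator and value encoding correct.

```typescript
// Hypothetical helper: the client sends JSON.stringify({ url }) without a JSON
// Content-Type, so in this setup req.body arrives as a raw string. As an
// assumption, it also accepts an object in case a body parser already decoded it.
function parseTranscribeBody(body: unknown): string {
  const data: unknown = typeof body === "string" ? JSON.parse(body) : body;
  if (
    typeof data === "object" &&
    data !== null &&
    typeof (data as { url?: unknown }).url === "string"
  ) {
    return (data as { url: string }).url;
  }
  throw new Error("Request body must contain a string 'url' field");
}

// Hypothetical helper: builds the Deepgram listen URL with the tutorial's options.
// append() is used for keywords because that parameter may repeat.
function buildListenUrl(keywords: string[]): string {
  const url = new URL("https://api.deepgram.com/v1/listen");
  url.searchParams.set("tier", "enhanced");
  url.searchParams.set("punctuate", "true");
  url.searchParams.set("paragraphs", "true");
  url.searchParams.set("diarize", "true");
  for (const keyword of keywords) {
    url.searchParams.append("keywords", keyword);
  }
  return url.toString();
}
```

URLSearchParams percent-encodes the colon in Bekah:2 as %3A, which is standard query-string encoding.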

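```typescript
import type { NextApiRequest, NextApiResponse } from 'next'

export default async function handler(req: NextApiRequest, res: NextApiResponse) {
  // Read the Deepgram API key stored in Repl Secrets (exposed as an environment variable)
  const mySecret = process.env['DG_API_KEY']

  // The client sends the body as a JSON string, so parse it and pull out the audio URL
  const { url } = JSON.parse(req.body)

  // Ask Deepgram to transcribe the hosted audio file
  const response = await fetch('https://api.deepgram.com/v1/listen?tier=enhanced&punctuate=true&paragraphs=true&diarize=true&keywords=Bekah:2&keywords=Hacktoberfest:2', {
    method: 'POST',
    headers: {
      'Authorization': 'Token ' + mySecret,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({ url }),
  });

  const json = await response.json()

  // The result is sent as a JSON-encoded string; the client calls JSON.parse on it
  res.status(200).json(JSON.stringify(json))
}
```

Notice that the API call includes punctuate=true, paragraphs=true, and diarize=true. We want to support podcast episodes with more than one person on them, and we want the result to be readable, so we turn on these options. Punctuate adds punctuation, paragraphs groups the text into paragraphs, and diarize detects speaker changes so the transcript can be broken down by speaker. The keywords parameters boost recognition of specific words the model might otherwise miss, here the name Bekah and the term Hacktoberfest. Now that the server-side code is set up, let's connect it to our index.tsx file.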
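Before wiring up the front end, it can help to see what the diarized response looks like to your code. This sketch assumes the paragraph-formatted transcript puts each speaker's paragraph on its own line with a Speaker N: label. The ListenResponse type covers only the fields this tutorial reads, and extractTranscript and splitSpeakerLines are hypothetical helpers.

```typescript
// Assumption-trimmed type: only the path this tutorial reads
// (results.channels[0].alternatives[0].paragraphs.transcript), not the full schema.
interface ListenResponse {
  results?: {
    channels?: Array<{
      alternatives?: Array<{ paragraphs?: { transcript?: string } }>;
    }>;
  };
}

// Returns the paragraph-formatted transcript, or "" if any level is missing.
function extractTranscript(data: ListenResponse): string {
  return data.results?.channels?.[0]?.alternatives?.[0]?.paragraphs?.transcript ?? "";
}

interface SpeakerLine {
  speaker: number | null; // null when the line has no "Speaker N:" label
  text: string;
}

// Assumes speaker paragraphs are separated by newlines and begin with "Speaker N:".
function splitSpeakerLines(transcript: string): SpeakerLine[] {
  return transcript
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line) => {
      const match = /^Speaker (\d+):\s*(.*)$/.exec(line);
      return match
        ? { speaker: Number(match[1]), text: match[2] }
        : { speaker: null, text: line };
    });
}
```

For example, a transcript with "Speaker 0: Welcome to the show." on one line and "Speaker 1: Thanks for having me." on the next comes back as two entries, speaker 0 and speaker 1. You could use these to style each speaker differently.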

Below the setFile hook, let's create a transcribe function. It needs to call our API route, /api/transcribe, send the file URL, get the response back with our transcription, and store that transcript in a new state variable, transcription, using its setter setTranscription, so we can render it on the page. Here's what it will look like:

```tsx
const [transcription, setTranscription] = useState('');

const transcribe = async () => {
  try {
    // Send the audio URL to our API route as a JSON string
    const response = await fetch('/api/transcribe', {
      method: 'POST',
      body: JSON.stringify({ url: file }),
    });

    // The API route returns a JSON-encoded string, so parse it a second time
    const received = await response.json();
    const data = JSON.parse(received);

    // Pull the paragraph-formatted transcript out of Deepgram's response
    const transcript = data.results.channels[0].alternatives[0].paragraphs.transcript;
    setTranscription(transcript);
  } catch (error) {
    console.error(error);
  }
};
```

Now we need to handle how the text is displayed on the page. To make the transcript easier to follow, we should start a new line every time the speaker changes. To do that, we'll create a new useState hook called lines and split the transcript into separate lines. We'll also render the transcript div only when there's a transcript. Here's the code to handle this:

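```tsx
const [lines, setLines] = useState<string[]>([]);

useEffect(() => {
  // With paragraphs and diarize on, each speaker's paragraph starts on a new line;
  // split on line breaks and drop the empty lines between paragraphs
  setLines(transcription.split('\n').filter(line => line.trim() !== ''));
}, [transcription]);
```

Here's the JSX that renders the transcript: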

```tsx
{transcription && (
  <div className={styles.transcript} id="new-transcription">
    {lines.map((line, index) => (
      // Each line of the transcript gets its own paragraph
      <p key={index}>{line}</p>
    ))}
  </div>
)}
```

If you want to try it out, you can use this sample audio. At this point, our Next.js + Deepgram project should let you turn audio files into transcripts. Happy listening!

If you have any feedback about this post, or anything else around Deepgram, we'd love to hear from you. Please let us know in our GitHub discussions.