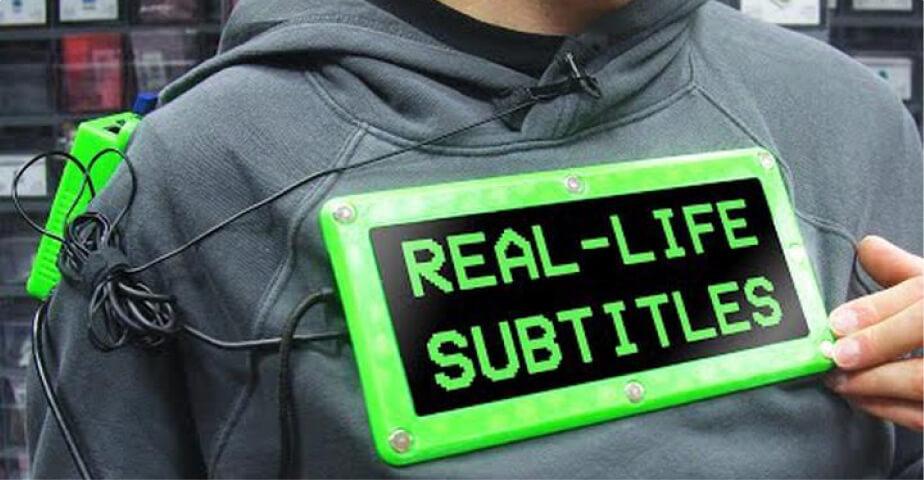

Read My Chest: This Hoodie Subtitles Everything I Say

TRANSCRIPT

Zack Freedman

Fifteen percent of you are watching this video with subtitles enabled. But if you talk to me in real life, there isn’t a subtitle in sight. This will not stand. The planet might be boiling, cancer might be uncured, but I chose to build a hoodie that subtitles my speech in real time. Let’s hook some AI to a wearable Pi and see how yours truly transformed himself into the world’s most narcissistic Teletubby. Ladies, gentlemen, cyborgs, and Mark Zuckerberg’s virtualized connectome, welcome to Voidstar Lab. I don’t hone in on project ideas right from the gecko; for all intensive purposes, I come up with my ideas before the time is ripe to build them. I made myself a dumping ground for the project ideas too zesty to discard, but too * to use right away, and I call it the Big * List of * Ideas. It’s a gray area of *, which technically makes it a beige area, and it’s where ideas can ferment until some X factor transforms them from half-assed to fully assed.

This little nugget popped out of a chance encounter that only happened because I am a noodle-armed soy boy. When I built the Snapmaker, I needed something to put it on, so I went to Home Depot to grab myself a nice, solid workbench. Shortly after buying the workbench, I realized it was too solid. It didn’t fold or break down or anything. I tracked down a real man and tried to explain the situation, but he gestured to this little badge on his apron that read “hard of hearing.” My face was diapered and my American Sign Language is mostly profanity, so we were forced to communicate via eyebrows alone. The whole encounter made me realize how much it must suck when you rely on reading lips, but everyone’s either covering their mouth or a Karen. I mean, like, they are a Karen, not, like, they’re wearing Karen on their face like a mask. Although, if I were to hypothetically mount a display at nip level and add a mic and some speech-recognizing AI, then everyone, no matter who they are, could receive my every word, whether they want it or not. But that would involve writing a lot of code, and I hate writing code, especially a lot of it. Onto the video list it went.

A few months later, a startup named Deepgram reached out and commissioned the royal us to showcase their technology by building it into a silly project. Deepgram is a cloud-hosted speech-to-text service that provides two APIs: one for prerecorded audio and one for streaming. You can upload an audio file in almost any format and Deepgram’s typewriter monkeys will transcribe it, or you can stream audio as it arrives and get a continuous conversion. I am going to be completely honest. I mixed them up with a different startup, and by the time I realized that I was working with the speech-to-text startup and not the 3D scanning startup, I’d already signed a contract. This isn’t even the first time this has happened. I once screwed up a hackathon project because I mistook the client for, and I am being absolutely serious here, their identical twin.
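For anyone who wants to poke at the two APIs directly: as I understand Deepgram’s public docs, both go through a `/v1/listen` endpoint, prerecorded audio as an HTTPS POST and live audio over a WebSocket, with options passed as query parameters. The sketch below only builds those URLs. `listen_url` is a hypothetical helper, not part of Deepgram’s SDK, and the option names shown (`encoding`, `sample_rate`, `channels`, `interim_results`) should be checked against the current API reference.

```python
from urllib.parse import urlencode

# Assumed endpoints, based on Deepgram's public docs; verify before relying on them.
PRERECORDED_URL = "https://api.deepgram.com/v1/listen"  # POST a whole audio file
STREAMING_URL = "wss://api.deepgram.com/v1/listen"      # WebSocket, send audio as it arrives


def listen_url(streaming: bool, **options) -> str:
    """Build a /v1/listen URL with the given options as query parameters.

    Python booleans are converted to the lowercase strings "true"/"false";
    everything else is passed through urlencode as-is."""
    base = STREAMING_URL if streaming else PRERECORDED_URL
    params = {
        key: (str(value).lower() if isinstance(value, bool) else value)
        for key, value in options.items()
    }
    return f"{base}?{urlencode(params)}" if params else base


if __name__ == "__main__":
    # Hypothetical settings for raw 16-bit mono audio at 16 kHz with interim results on.
    print(listen_url(True, encoding="linear16", sample_rate=16000,
                     channels=1, interim_results=True))
```

Raw microphone audio has no file header, which is why the streaming URL has to spell out the encoding and sample rate, while a prerecorded file can usually describe itself.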

I was drinking Stranahan’s in the bath (don’t judge me) when I realized this idea had ripened like a fine cheese. It’s a process I call parmesanovation. See, Deepgram already wrote the hardest part of this idea, the speech recognition. As for putting electronics in a hoodie, I struggle to not put electronics in my hoodies. The points I’m making here are: A, ideas can wait till the right time to build them; B, I came up with this idea well before I got in touch with Deepgram, so I’m not a shill; and C, local single malt is a business expense. This stretch bar display is a leftover from the Data Blaster cyberdeck, and it is the perfect aspect ratio for dropping a sentence or two atop my man cleavage. For input, we’re gonna use a lavalier mic from the early days of the channel. It’s tiny, easy to hide, and it’s got a directional pickup, so there’s less interference from other people in the conversation, if I ever give them an opening.

The brains shall be the Raspberry Pi 3B+. It’s got onboard graphics, Wi-Fi, and Python, and most importantly, I’ve already used them in, like, a bazillion products. Projects. Blah. I grabbed myself a hoodie at Goodwill. It doesn’t need to be anything special; it just needs to fit a little more snugly than usual, so the display doesn’t flop around. Also, Raspberry Pis do not have an audio input, so I hit up Micro Center for a Codec Zero, a shield-style sound card with pirst farty support on Pi OS. I gotta shout out Micro Center for not only sponsoring a lot of the parts for this project, but also for providing Voidstar Lab with a DJI RSC 2 gimbal so we can get less nauseating handheld shots. Micro Center, if you’re not aware, is the last meatspace electronics retailer on Earth, where you can restock your filament, grab an individually addressable RGB pro gamer power cable, and impulse buy a two-thousand-dollar graphics card in person after touching it and reading the text on the back of the box. Porch pirates can’t swashbuckle your little Raspberry Pi gaming PC cooling tower if you bring it home yourself. Micro Center allowed me to wear this project to their store and film consenting customers and goldbricking employees. But let’s not lick the wrong end of the ice cream cone. We have to build the project first.

I jumped straight into modeling and printing enclosures. I figured there’s a higher chance of the electronics shorting out on desktop clutter than of me switching them out, and if I have to order parts, this project is gonna take forever. These oversized holes are designed for special fabric fasteners called Chicago nuts. More on deez nuts later. After a light screwing (fnar fnar), the project looked feature complete. As I talked about in my Finish Your Projects video, sequencing the project this way gives us an emergency exit. We can end the project right here. I can just hang this all around my neck with a string, display some random gibberish on it, and I have something to show. Don’t put yourself in a position where you have to finish the whole project or you’ve got nothing. This is the first project in a very long time that was just a bunch of off-the-shelf products being used for their intended purposes. This thing came together way too easily, and it is creeping me out. My comfort zone is barely contained existential dread, so let’s return to it by writing some code.

Warning: the following section contains programming. Viewers who find computer words boring are advised to skip to the next chapter. Unless said viewers also like watching my sanity visibly decrease with each passing minute, in which case, grab a box of Junior Mints and dim the lights.

I like to start a project’s code by writing separate tests for each component, ideally in Python, because it’s the only language that’s better than Widget Workshop.

I installed PyAudio and confirmed the Codec Zero can, you know, work. I found myself an old-school on-screen display font, and I made sure the Pygame graphics library could do the job. Finally, I loaded up Deepgram’s Python SDK, provisioned myself an API key, and tested that too. Their example code transcribes BBC Radio à la minute, which I gotta say is a pretty clever source of demo dialogue, even if those Brits don’t know how to spell the word “dialogue.” Once we have known working code running on known working hardware, we can say “now kiss,” combine our scripts, stir in some business logic, and watch everything go to hell in a controlled manner.

For this project, I am the content, so instead of streaming audio from the web, we gotta stream audio from the face. I’ve never actually done any audio input on a Pi before, so I expected the worst, but it turned out to actually be the easiest part of the project. The PyAudio module lets you access the audio driver, and that uncorks a torrent of raw wave data. We dump that straight into Deepgram’s API, zero processing required. It’s like putting the end of the toilet paper roll in the toilet and flushing it. Parents, that is what you get for letting your kids watch my show. I’m not a babysitter.
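The “zero processing” part works because raw PCM is just a stream of bytes that can be sliced into chunks and sent as-is, as long as the server is told the format. A sketch of the bookkeeping, assuming 16-bit mono audio at 16 kHz (the video doesn’t say what format the hoodie actually used): chunk size follows from sample rate × channels × bytes per sample × duration. Note that PyAudio’s `Stream.read()` takes a frame count, not a byte count. The helper names here are hypothetical.

```python
def frames_per_chunk(sample_rate: int, chunk_ms: int) -> int:
    """Number of audio frames (one sample per channel) in chunk_ms milliseconds."""
    return sample_rate * chunk_ms // 1000


def chunk_bytes(sample_rate: int, channels: int, sample_width: int, chunk_ms: int) -> int:
    """Bytes of raw interleaved PCM in one chunk; sample_width is bytes per sample."""
    return frames_per_chunk(sample_rate, chunk_ms) * channels * sample_width


def split_pcm(raw: bytes, size: int):
    """Yield fixed-size slices of a raw PCM byte string; the last may be shorter."""
    for start in range(0, len(raw), size):
        yield raw[start:start + size]


if __name__ == "__main__":
    # Assumed format: 16 kHz, mono, 16-bit (2-byte) samples, 100 ms chunks.
    print(frames_per_chunk(16000, 100))   # 1600 frames to request from the sound card
    print(chunk_bytes(16000, 1, 2, 100))  # 3200 bytes per chunk to send onward
```

Smaller chunks mean lower latency but more messages per second; 100 ms is just an illustrative middle ground.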

But did we finally get an easy project? Of course not. Everything is hard all the time. By default, Deepgram’s streaming API is not actually real time. Their algorithms use context clues like humans do, so the server waits till you finish a thought and then crunches the statement. But my tongue train never slows down, and by the time my pecs got something to show, I’ve already rambled into another dimension. Deepgram’s solution is interim transcriptions, and there is a reason why they disable it by default. When you flip it on, the server sends you the work in progress, which gives you something to show as ASAP as possible. The downside is that it’s guesswork. The system is gonna rewrite that transcript over and over again as the sentence develops, so now we have to account for the subtitles retroactively changing. And it gets worse. The screen only fits a few lines at once, but I speak, I’ve been told, quite fast. No matter how quickly I spew projectile word vomit, the code has to keep each word on screen long enough that you can read it. If the transcription changes, we have to restart that timer to give the listener slash viewer slash victim a chance to catch up, but only if the text that changed is visible. And it gets worse. An audio sample is just a snapshot of sound at an instant in time, so if we’re too late, we lose it forever. Whenever we ask the sound card for a sample, send data to Deepgram, set a timer, or do anything else that takes time and uses the system, our code needs to get it rolling, ditch it, do something else, and hopefully remember to come back and collect the results. The program flow has to zoom back and forth from task to task like a dude on meth, or a dude with ADHD without meth.
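One way to handle the interim-versus-final dance, sketched under assumptions: each streaming result is JSON with the text at `channel.alternatives[0].transcript` and an `is_final` flag, which is Deepgram’s documented response shape as far as I know. Final text is appended for good; each interim guess replaces the previous one. The display width, line count, and the `SubtitleBuffer` class itself are hypothetical, not taken from the project’s repo. The timer rule is in `_refresh`: it only restarts when the lines actually on screen change.

```python
import json
import textwrap
import time


class SubtitleBuffer:
    """Hypothetical subtitle state for a small display.

    Finalized words are kept; the latest interim guess is replaced
    wholesale each time a new one arrives."""

    def __init__(self, width=32, lines=2, clock=time.monotonic):
        self.width = width            # characters per display line (assumed)
        self.lines = lines            # lines that fit on screen (assumed)
        self.clock = clock            # injectable so tests can fake time
        self.final_words = []         # words the server has marked final
        self.interim = ""             # current work-in-progress guess
        self.shown = []               # lines currently on screen
        self.shown_since = clock()    # when the on-screen lines last changed

    def handle_message(self, raw: str) -> None:
        msg = json.loads(raw)
        # Assumed response shape; verify against Deepgram's streaming docs.
        text = msg["channel"]["alternatives"][0].get("transcript", "")
        if msg.get("is_final"):
            self.final_words.extend(text.split())
            self.interim = ""         # the guess has been superseded
        else:
            self.interim = text       # replace, don't append
        self._refresh()

    def _refresh(self) -> None:
        words = self.final_words + self.interim.split()
        wrapped = textwrap.wrap(" ".join(words), self.width)
        visible = wrapped[-self.lines:]   # only the newest lines fit
        if visible != self.shown:
            # Restart the reading timer only if what the viewer sees changed.
            self.shown = visible
            self.shown_since = self.clock()

    def seconds_on_screen(self) -> float:
        """How long the current on-screen lines have been unchanged."""
        return self.clock() - self.shown_since
```

A display loop could compare `seconds_on_screen()` against a minimum reading time before letting newer text scroll older lines away; a guess that changes only off-screen words leaves the timer alone.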

So to make the Deepgram results look and feel like real subtitles, I had to write over six hundred fifty lines of complex, frustrating malarkey that I barely understand, and I even added comments. I know some * psychopaths enjoy programming, so feel free to head into the GitHub repo and critique my cringingly crappy concurrent code. At least it was easy to, like, get this thing to run automatically. I just created a systemd service that calls my Python script on boot and restarts it if it crashes, because I wasn’t asking.
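The repo’s actual structure isn’t described in the video, so this is only a generic sketch of the concurrency pattern described above, using Python’s `asyncio`: the microphone side pushes chunks into a queue as soon as they arrive, and a separate task drains the queue toward the network, so a slow send never makes the sound card wait and no audio is dropped. `fake_mic` and `fake_sender` are hypothetical stand-ins for the sound card and the Deepgram WebSocket. If audio comes from a PyAudio callback, which runs on its own thread, chunks would be handed to the event loop with `loop.call_soon_threadsafe(queue.put_nowait, data)` instead of `await queue.put(...)`.

```python
import asyncio


async def fake_mic(queue: asyncio.Queue, chunks: list) -> None:
    """Stand-in for the sound card: push chunks on a fixed cadence."""
    for chunk in chunks:
        await queue.put(chunk)
        await asyncio.sleep(0.01)   # pretend a new buffer arrives every 10 ms
    await queue.put(None)           # sentinel: no more audio


async def fake_sender(queue: asyncio.Queue, sent: list) -> None:
    """Stand-in for the WebSocket: drain the queue and 'send' each chunk."""
    while True:
        chunk = await queue.get()
        if chunk is None:
            break
        await asyncio.sleep(0.02)   # pretend the network is slower than the mic
        sent.append(chunk)


async def main(chunks: list) -> list:
    queue = asyncio.Queue()         # unbounded, so the mic never waits on the network
    sent = []
    # Both tasks run concurrently; each yields at every await.
    await asyncio.gather(fake_mic(queue, chunks), fake_sender(queue, sent))
    return sent


if __name__ == "__main__":
    print(asyncio.run(main([b"a", b"b", b"c"])))  # [b'a', b'b', b'c']
```

An unbounded queue trades memory for never losing audio; a real build might cap it and log when the network falls too far behind.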

Now that I had the technology, I had to make it wearable. I did the final fabrication during one of my Twitch streams, and chat convinced me to try the hoodie on before I bolted on my irreplaceable electronics. It turned out to be a little restrictive about the chest. Thankfully, Brooke is a straight-up G and raided Target for more hoodies, like I should have done in the first place. To mount the stuff, I printed a template, cut out some holes, and busted out the Chicago nuts. These are screw fasteners with broad heads and a long, smooth shaft, and that makes them perfect for mounting 3D prints to fabric and leather. You’ll notice the two halves of the display enclosure actually clamp the hoodie fabric in between. The original plan was to cut a big old hole, but those Twitch chat bastards came up with this idea, and they were right again. How am I supposed to look smarter than my audience when I’m objectively not? I should’ve done video essays.

I mounted the Pi on my shoulder to add that jankalicious aesthetic and really sell the idea that this is not a commercial product. The angry pixies are imprisoned in a USB power bank, and I tucked that into my pants pocket because this is becoming a bit of a heavy hoodie. I ran some cables, I tucked the lapel mic into the hood, I hit the power button, and the prototype was done. I put out the call to our patrons, headed to Micro Center donning my new hoodie, and connected it to their guest Wi-Fi for some parasocial prototype field testing.

I kind of assumed real-world subtitles would be borderline unusable, but IRL, the accuracy was surprisingly high. It turns out that when I speak off the cuff, I use a different vocabulary and cadence that’s more compatible with Deepgram’s speech model. We did have a nice boo-boo where I was hacking and it made Brooke laugh hard enough to shake the gimbal. Our directional mic turned out to be more directional-inspired, so the hoodie ended up subtitling both sides of the conversation. Deepgram has an option to identify different speakers, but I just didn’t know about it at the time. This turned out to not actually be a bad thing: people really got a kick out of talking really loud at me and seeing their speech show up too. We also saw a surprising and positive social dynamic that I wasn’t expecting. The chest-mounted screen gave people license to look at my hoodie instead of looking me in the eye, which I think made a lot of people who normally would never strike up a random conversation feel more comfortable. The bright colors and the chunky enclosures drew a good amount of positive attention, and as soon as some words appeared, most people got it. The time we put into nailing the instant feedback turned out to be absolutely critical in selling the fact that these were real-time subtitles.
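About that speaker option: Deepgram documents a `diarize` setting which, as I understand it, tags each word in a result with a speaker number when enabled. A hedged sketch of turning such a word list into labeled subtitle lines follows. The field names (`word`, `punctuated_word`, `speaker`) are my reading of Deepgram’s docs and should be verified, and `label_speakers` is a hypothetical helper. Consecutive words from the same speaker are merged so the screen doesn’t show a label per word.

```python
from itertools import groupby


def label_speakers(words: list) -> list:
    """Collapse consecutive words from the same speaker into labeled lines.

    Each word is a dict; 'speaker' is assumed present only when
    diarization is enabled, and 'punctuated_word' only when punctuation is."""
    lines = []
    for speaker, run in groupby(words, key=lambda w: w.get("speaker")):
        text = " ".join(w.get("punctuated_word", w["word"]) for w in run)
        # Without diarization there is no speaker number, so no label.
        lines.append(f"[{speaker}] {text}" if speaker is not None else text)
    return lines


if __name__ == "__main__":
    sample = [
        {"word": "hi", "speaker": 0},
        {"word": "there", "punctuated_word": "there.", "speaker": 0},
        {"word": "hello", "speaker": 1},
    ]
    print(label_speakers(sample))  # ['[0] hi there.', '[1] hello']
```

`groupby` only merges adjacent runs, which is what a subtitle display wants: if speakers alternate, each turn gets its own line.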

The service was actually returning results so quickly that people thought I had, like, recorded it in advance. It wasn’t perfect, though. The dangling wires constantly threatened to snag on stuff. I never managed to get the Pi’s low-voltage warning to go away. And we also got spanked by a bug that hangs the program if I stop talking for a few minutes. It wasn’t a game breaker; the workaround was just to never stop talking, and I was gonna do that anyways. The hoodie itself is a lot more comfortable than it looks, but I didn’t expect the glare to be so powerful. It turned out to, like, ruin a whole bunch of our footage, and I really should have put, like, a matte screen protector or something on it.

These are the types of bugs you just cannot figure out on the bench. You have to take it out into the real world and get your butt kicked a little. All in all, this was a fun little project that forced me to learn asynchronous programming and web APIs, and made me leave the lab, despite my best efforts to avoid all of those.

I’d like to thank Micro Center for letting me strut around like a radioactive peacock, and thanks to Deepgram for sponsoring the build and its video. Check the description to see all the parts I used in this project and learn how easy it is to get talking with Deepgram. Major kudos to our patrons. We got an incredible amount of support from the Gridfinity video, and not only does it mean a lot that people enjoy my work enough to support it, but that support also improves future projects. Our highfalutin collaborators include command Chuck Fiduc small dong, Brian D’S folded nutcaster, the Catboy, Jeremy Arnold, sweaty vag and Reagan. I hid their names somewhere in this episode, but where, where? Deepgram’s speech-to-text is going to absolutely eviscerate our poor lab assistant supporters’ silly names, and I don’t get to see how badly until I cut the video. Let’s watch it mangle them: ether in Gomes, Ryan Gueller, Bob Dobbington, zzzzz s p, truck who’s in Florian, Burberserved nothing wrong yet. DSA, a Zonda, wielder of iron age of shrink. Trans writes, Kevin McGrath, Nicholas Eckhardt, GoodSuck, error rateer, Roger Pinkham of the Great Star Theater, Burund documentary, Brad Cox, Protagonist, powerful CCH, Granville Schmidt. My dog is a bear. Max luck says, if you can’t fix it, you don’t own it. Bagel, e fun man, vodka, Ashley Coleman, Sir Durbington of Durbotopia. Eddie, one handful of beans, bill schooler, rusty flute, Good lady Nat Queen of Lemons’ Victor of the great citrus warriors. Period clots, cats, isekay elf, machiro, Chandesuna. Michael Roche, Storm B design, Talend Democratic socialist, and a pretty righteous dude, Dash Zach, Bradford Ben Achilles, a very silly vixen. The world’s greatest drone pilot, Bockgrinder FPV. Nani? Gary Duval, Philip, the cusslefish, Lydia Kay, Nino Gantz tano, Nathan Johnson, t k m k, Thomas B Meyers, Vandaifa. It’d better be able to pull this one off, Boulder Creek Yard James.
Thanks for watching, and I hope you see what I’m saying in the future.