Trained on 100,000+ Voices: Deepgram Unveils Next-Gen Speaker Diarization and Language Detection Models

Josh Fox

TL;DR:

Deepgram’s latest diarization model offers best-in-class accuracy that matches or beats its best alternatives. In addition to outstanding accuracy, our language-agnostic diarization model processes audio 10 times faster than the nearest competitor.

We’ve revamped our automatic language detection feature resulting in a relative error rate improvement of 43.8% over all languages and an average 54.7% relative error rate improvement on high-demand languages (English, Spanish, Hindi, and German).

Reducing confusion where it counts: New Speaker Diarization

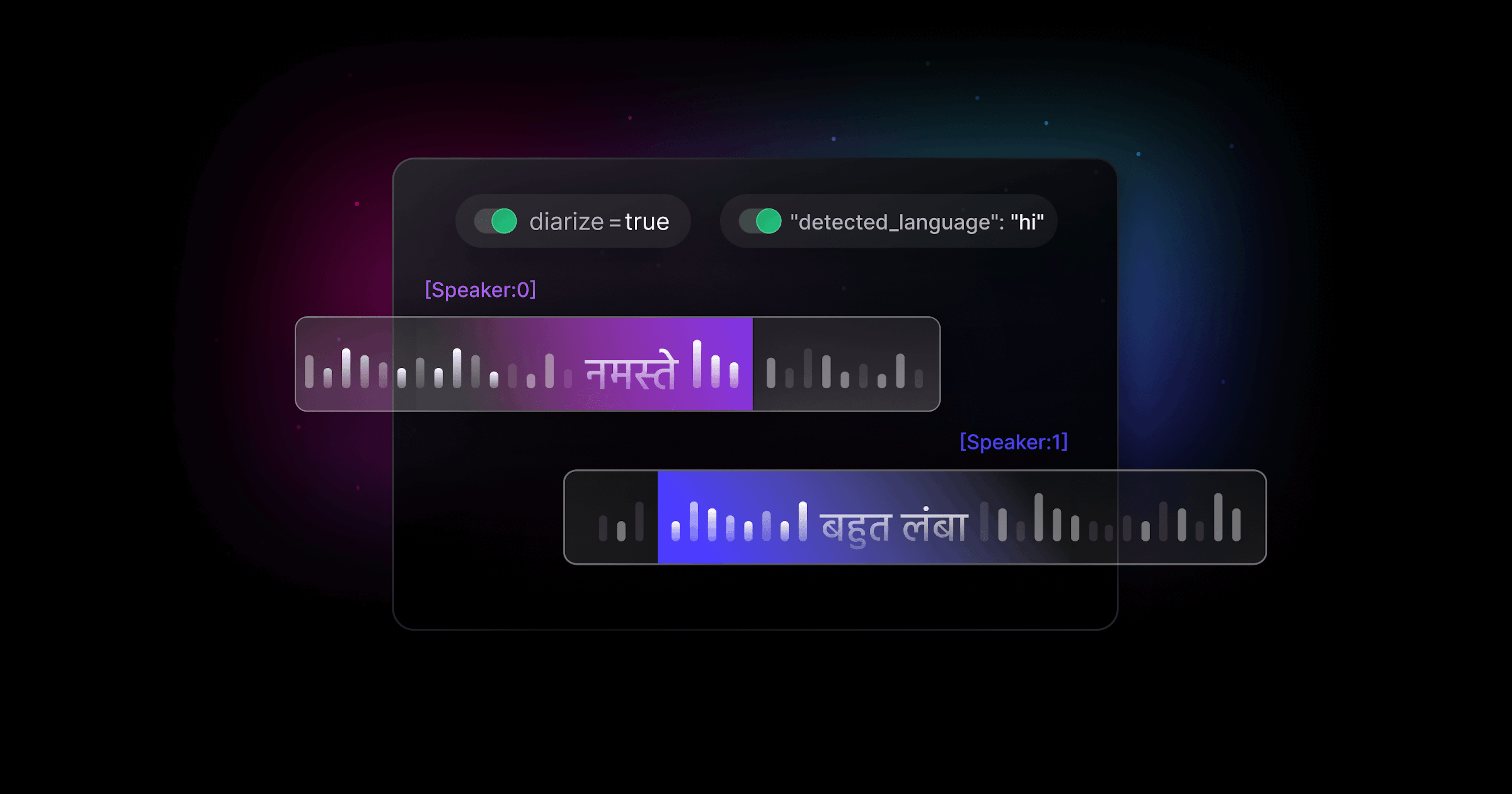

Today, we’re releasing a new architecture behind our speaker diarization feature for pre-recorded audio transcription.

Compared to notable competitors, Deepgram’s diarization delivers:

53.1% improved accuracy overall from the previous version, meeting or exceeding the performance of our best competitors.

10X faster turnaround time (TAT) than the next fastest vendor.

Language-agnostic support, unlocking accurate speaker labeling for transcription use cases around the globe. Language-agnostic operation means that no additional training is required or changes in performance will occur as Deepgram expands language support in the future.

Speaker diarization—free with all of our automatic speech recognition (ASR) models, including Nova and Whisper—automatically recognizes speaker changes and assigns a speaker label to each word in the transcript. This greatly improves transcript readability and downstream processing tasks. Reliable speaker labeling enables assignment of action items, analysis of agent or candidate performance, measurement of talk time or fluency, role-oriented summarization, and many additional NLP-powered operations that add substantial value for our customers’ customers.

Our speaker diarization feature stands out with its ability to work seamlessly across all languages that we support, a key differentiation from alternative solutions, and especially valuable in multilingual environments such as contact centers and other global segments where communication frequently occurs in multiple languages.

Today’s performance improvements provide significant benefits to end users in two ways. First, these upgrades significantly reduce costly manual post-processing steps, such as correcting inaccurately formatted transcripts. Second, they increase operational efficiency by accelerating workflows, now enabled by faster turnaround times for diarized ASR results. From improved customer service in call centers to better understanding of medical conversations, this leads to better outcomes and increased ROI for your customers.

Our Approach: More voices, without compromise

At a high level, our approach to diarization is similar to other cascade-style systems, consisting of three primary modules for segmentation, embeddings, and clustering. We differ, however, in our ability to leverage our core ASR functionality and data-centric training to increase the performance of these functions, leading to better precision, reliability, and consistency overall.

Speaker diarization is ultimately a clustering problem that requires having enough unique voices in distribution in order for the embedder model to accurately discriminate between them. If voices are too similar, the embedder may not differentiate between them resulting in a common failure mode where two distinct speakers are recognized as one. Out-of-distribution data errors can occur when the training set was not sufficiently representative of the voice characteristics (e.g. strong accents, dialects, different ages in speakers, etc.) encountered during inference. In this failure mode, the embedder may not produce the right number of clusters and the same speaker may be incorrectly given multiple label assignments.

Our diarization accurately identifies speakers in complex audio streams, reducing such errors and resulting in more accurate transcripts through a number of intentional design choices in our segmentation, embedding, and clustering functions and our groundbreaking approach to training.

To overcome even the rarest of failure modes, our embedding models are trained on over 80 languages and 100,000 speakers recorded across a substantial volume of real-world conversational data. This extensive and diverse training results in diarization that is agnostic to the language of the speaker as well as robustness across domains, meaning consistently high accuracy across different use cases (i.e. meetings, podcasts, phone calls, etc.). That means that our diarization can stand up to the variety of everyday life — noisy acoustic environments, ranges in vocal tenors, nuances in accents — you name it.

Our large-scale multilingual training approach is also instrumental to achieving unprecedented speed, since it allows us to employ fast and lean networks that are dramatically less expensive compared to current state-of-the-art approaches, while still obtaining world-class accuracy. We coupled this with extensive algorithmic optimizations to achieve maximal speed within each step of the diarization pipeline. As with Deepgram’s ASR models writ large, the value of our diarization is speed and accuracy. There is no limit to the number of speakers that can be recognized (unlike most alternatives), and the system even performs well in a zero-shot mode on languages wholly unseen during training.

Figure 1: Deepgram Diarization System Architecture