LLM Training: From Data Ingestion to Model Tuning

Nithanth Ram

A chef is only as good as their ingredients allow them to be. Even the Michelin-touting Gordon Ramsays of the world exist in their culinary echelon largely due to using the highest quality ingredients, equipment, and techniques. Training and refining their skills over time makes them highly capable of whipping up some great food using a variety of ingredients.

Much like a chef, a large language model’s (LLM) performance is largely derived from the quantity and quality of its training data. LLMs have demonstrated the propensity to become more capable as they grow in size, revealing more latent abilities at larger orders of magnitude. However, the natural language data consumed by these models during training doesn’t automatically translate to robust generative outputs. The output of these monolithic models is only as good as what’s ingested by the model at its inception. If you put garbage in, you’ll get garbage out.

Data ingestion by LLMs, as a concept, has been largely glossed over by a broad portion of the generative AI audience. Just as LLMs’ capabilities are sometimes chalked up to black(box) magic, data ingestion is often described in a way that makes it sound far easier than it is. To the layman, LLMs seemingly ingest a bunch of natural language text data during training through some esoteric abstract function.

The reality is that data ingestion is a much more complex and difficult process. LLMs need an interpretable, well-structured corpus of natural language data to kickstart their training process. Data, in this multifaceted age of the internet, is anything but uniform. It’s messy, complex, and tangled. This makes trying to piece together a structured corpus of training data a tall task and emphasizes just how important data preparation is in the LLM stack and pipeline. Let's take a further dive into what the LLM data ingestion process entails, or how the LLM “sausage” is really prepared.

Collection/Curation

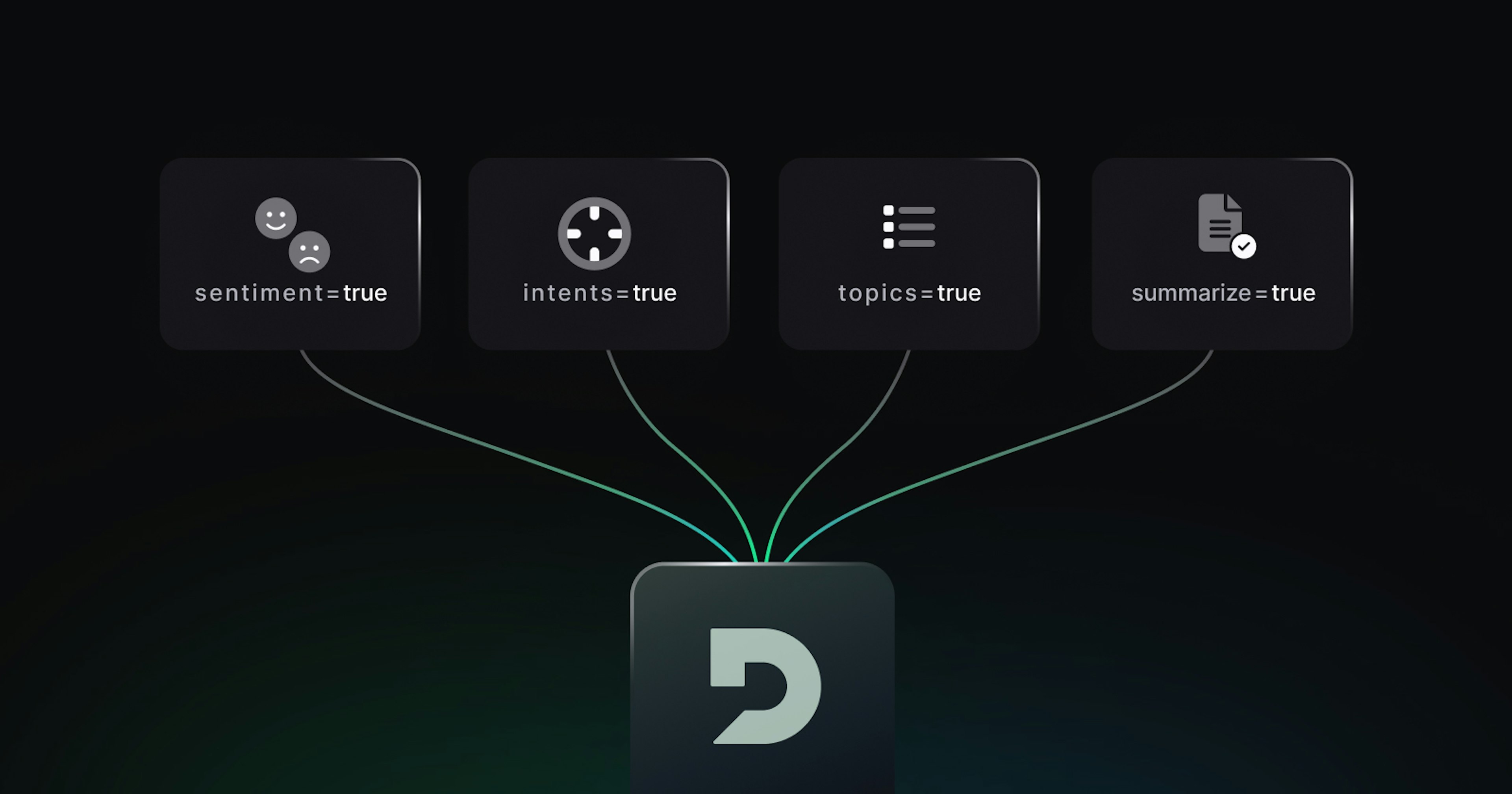

At the start of the data ingestion process is the collection and curation of the data that will be fed to the LLM. Massive open-source datasets like Common Crawl and the Pile are built from text scraped from the internet using web crawling tools. Companies like OpenAI and Deepgram rely on these vast datasets to train their models. If the model being trained is a foundation model, the objective is to aggregate a large quantity of data. Since scale is correlated with performance, the focus is on gathering any sort of text data that will help the LLM generalize to a wide variety of prompts. However, if the LLM needs to be fine-tuned or domain-specific, it’s critical to search for a subset of data that matches the task.

For example, codegen models may want to cover a domain like GitHub and crawl over repositories’ comments and code files to become better aligned to programming tasks. The data collected needs to be relevant to the task the LLM is being trained for. The quality of the data is also reviewed for statistically significant skews or obvious biases, to prevent these aberrations from propagating downstream in the training process. Some data collection processes employ a filtering tool or API to screen out toxic content (e.g. bad words) from large open-source pre-training datasets. The researchers who put together the Pile dataset used tools with email spam heuristics to categorize harmful content in the dataset. Filtering a dataset for toxic and biased content will assist the downstream process of training an LLM, but it should be done meticulously so that minority perspectives are not inadvertently blocked out. The sources and ethical constraints of the data collected also need to be strongly weighed due to issues like privacy and intellectual property rights. A misaligned LLM can be partly avoided, or at least its problems alleviated, at this initial phase of data ingestion.
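To make the filtering step above concrete, here is a minimal sketch of a blocklist-based filter. It is an illustration only: the `BLOCKLIST` contents, the `max_ratio` threshold, and the function names are hypothetical, and production pipelines typically rely on curated lists or trained classifiers rather than a handful of keywords.

```python
import re

# Hypothetical blocklist for illustration; a real pipeline would use a
# curated list or a trained toxicity classifier.
BLOCKLIST = {"badword", "slur"}


def flag_ratio(text, blocklist=BLOCKLIST):
    """Return the fraction of word tokens in `text` that are on the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(w in blocklist for w in words) / len(words)


def filter_documents(docs, max_ratio=0.1):
    """Split docs into (kept, flagged) lists based on the blocklist ratio."""
    kept, flagged = [], []
    for doc in docs:
        if flag_ratio(doc) > max_ratio:
            flagged.append(doc)
        else:
            kept.append(doc)
    return kept, flagged
```

Returning the flagged documents, rather than silently dropping them, leaves room for a human review pass, which is one way to guard against over-filtering legitimate perspectives.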

Data Preprocessing

If the collection of datasets was like our chef gathering all the necessary fresh produce for their kitchen, then preprocessing would be preparing these ingredients to get them ready for the meal rush.

As stated before, data, and natural language data in particular, can be very messy in its original format. The automation that goes into scraping for these datasets can sometimes rely on very lazy heuristics that result in weak samples of data. These samples need to be cleaned and normalized before being fed to a language model. Stray whitespace, inconsistent punctuation, and errors in the data source all need to be handled before the text is passed in as training data.

Fortunately, there are libraries and frameworks that contain functions to do just this. These functions comb over a vast collection of data and perform transformations that normalize it for LLM ingestion. These can involve removing irrelevant information like HTML tags, advertisements, and boilerplate text from scraped text. Text normalization techniques are also applied to handle issues like spelling variations, contractions, punctuation marks, and uppercase characters. Preprocessing is where the validity and quality of the dataset are established so that they hold up downstream in the training process.
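As a rough illustration of the cleaning steps just described, the sketch below strips HTML tags, drops script and style contents, normalizes Unicode, collapses whitespace, and optionally lowercases. It uses only the Python standard library; the names `_TextExtractor` and `clean_text` are made up for this example, and real pipelines usually add boilerplate and advertisement removal on top.

```python
import re
import unicodedata
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def clean_text(raw, lowercase=True):
    """Strip markup and normalize a scraped HTML fragment into plain text."""
    parser = _TextExtractor()
    parser.feed(raw)
    parser.close()
    # Joining with a space keeps block elements apart; the trade-off is that
    # a word split by an inline tag would also be separated.
    text = " ".join(parser.parts)
    text = unicodedata.normalize("NFKC", text)  # unify compatibility characters
    text = re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
    return text.lower() if lowercase else text
```

Whether to lowercase is a design choice: it shrinks the vocabulary but discards casing information that some models make use of, so it is exposed here as a flag.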

However, feeding these models a raw, unbroken stream of natural language data is not an optimal way for the model to learn the corpus during training. In fact, it would probably result in horrible performance, with the model displaying signs of very low semantic understanding.

Instead, text data is tokenized. This process involves breaking the text into smaller units or tokens, which the model can then better sequence and predict during training and inference. Tokens can be words, subwords, or even characters, and they set the granularity at which the model learns and processes text. The specific tokenization strategy employed during training depends on the architecture and design of the language model. Models like Word2Vec and GloVe often tokenize at the word level, where each word is treated as a separate token. On the other hand, models like BERT and GPT-3 use subword tokenization techniques, such as WordPiece (BERT) or Byte-Pair Encoding (BPE, used by GPT-3), often implemented with libraries like SentencePiece, which can represent whole words as well as subword chunks.
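To give a feel for how subword tokenization works, here is a toy sketch of the core BPE idea: repeatedly merge the most frequent adjacent pair of symbols. This is a simplified teaching version, not the tokenizer any particular model ships with; the function names are invented for this example, and real implementations add byte-level handling, end-of-word markers, and far larger corpora.

```python
from collections import Counter


def get_pair_counts(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    words = Counter(tuple(w) for w in corpus.split())  # start from characters
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        words = merge_pair(words, best)
        merges.append(best)
    return merges


def apply_bpe(word, merges):
    """Tokenize a single word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = next(iter(merge_pair({symbols: 1}, pair)))
    return list(symbols)
```

For instance, after learning two merges from the corpus `"low low low lower lowest"`, the unseen word `"lowly"` is split into a learned chunk plus leftover characters, which is how subword tokenizers handle words they have never seen.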

Models can’t understand and associate words the way we do. They need to map these words onto a language they can understand: numbers. Tokenization splits up large streams of training text so that models can then proceed with vectorizing and “embedding” this data to better understand inputs and generate responses during inference.
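The step from tokens to numbers can be sketched as a simple vocabulary lookup. This is a minimal illustration under assumed names (`build_vocab`, `encode`, and the `<unk>` placeholder are choices made for this example); real tokenizers bundle this mapping with the tokenization rules themselves.

```python
def build_vocab(tokenized_docs, unk_token="<unk>"):
    """Assign each distinct token an integer id, reserving id 0 for unknowns."""
    vocab = {unk_token: 0}
    for tokens in tokenized_docs:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens, vocab, unk_token="<unk>"):
    """Map tokens to ids, falling back to the unknown id for unseen tokens."""
    return [vocab.get(tok, vocab[unk_token]) for tok in tokens]
```

With a vocabulary built from `[["the", "chef", "cooks"], ["the", "model", "learns"]]`, encoding `["the", "model", "bakes"]` yields `[1, 4, 0]`, since `"bakes"` was never seen and falls back to the unknown id.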

Ingestion and Training

Now that the ingredients have been chopped, diced, julienned, etc., it’s time to start cooking and assembling them into the food that’s wanted.

Once the training data has been tokenized, it is fed to the model to be ingested for training. During training, the tokens are converted into numerical representations known as embeddings. These are vector representations that encode the meaning of tokens and the relationships between them, facilitating a language model's understanding of natural language. Because these representations live in a shared vector space, vector operations such as cosine similarity can be used to measure how closely related two tokens or passages are.
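As a small sketch of what this looks like in practice, the code below builds a randomly initialized embedding table (one vector per token id) and computes cosine similarity between vectors. The function names and the random initialization are assumptions for illustration; in a trained model the vectors are learned, so similar tokens end up with similar vectors.

```python
import math
import random


def make_embedding_table(vocab_size, dim, seed=0):
    """Create one random `dim`-dimensional vector per token id (untrained)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)
```

Looking up a token's embedding is then just indexing the table by token id, for example `table[token_id]`. Cosine similarity ranges from -1 (opposite directions) to 1 (same direction), which makes it a convenient scale-independent measure of relatedness.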

Embeddings capture the semantic and contextual information of each token in a dense vector format. The model learns to predict the likelihood of a word appearing in a given context based on the surrounding words, capturing the semantic relationships between different words in the corpus. The language model's parameters are adjusted to optimize a specific objective, expressed numerically as a loss function, such as predicting hidden words in a sentence (masked language modeling) or predicting the next word in a sequence (causal language modeling). During training, the LLM’s weights are iteratively updated as it learns the vector space of these embeddings. The result is a semantic understanding of both the natural language in its training corpus and the textual input it receives after training.
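To illustrate what "optimizing an objective with a loss function" means for the causal case, here is a minimal sketch of next-token cross-entropy in plain Python. The function names are invented for this example, and the logits are supplied by hand; in a real model they come from the network, and a framework computes the gradients used to update the weights.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_loss(logits_per_position, target_ids):
    """Average cross-entropy between predicted distributions and true next tokens.

    logits_per_position[i] holds one score per vocabulary entry for position i,
    and target_ids[i] is the id of the token that actually came next.
    """
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)
```

The loss is low when the model assigns high probability to the token that actually follows and high when it does not, so lowering it over many examples pushes the model toward better next-token predictions.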

The dimensionality of embeddings can vary depending on the architecture of the language model. Commonly, embeddings have several hundred dimensions, allowing them to capture a rich range of semantic and syntactic properties of words and phrases. Embeddings are often trained jointly with the rest of the language model, enabling the model to learn both the task-specific representations and the underlying language structures simultaneously. Once the language model has been trained and the embeddings have been learned, these representations can be used to perform various NLP tasks, such as text classification, sentiment analysis, machine translation, or question answering.

The Significance of Data Preparation

So now we understand a little bit more about how complex and meticulous data preparation can be. The LLM “sausage” is hard to prepare just like our metaphorical chef putting in the prep work before the meal rush. But taking a step back, why is data preparation so important for foundational large language models? Aren’t they just supposed to take in a vast variety of data from all corners of the internet to function properly?

It’s important to ask: What do you want your model to be capable of? Is it one of these large-scale foundation models that is supposed to be capable of nearly everything? In that case you’ll need a sample of basically everything so that your model is largely generalizable to any prompted inputs it is presented with.

However, if you want a domain-specific model and you need to fine-tune for a specific task, you’ll need to distill that breadth of knowledge into a smaller, focused training set. For example, you might expose a GPT model to multiple sources of financial data and prospectuses in order to improve its reasoning and entity recognition capabilities around finance topics. Here, the model’s functionality and efficacy are heavily reliant upon how the data is collected and cleaned so that it can be ingested without errors. As stated before, garbage in equals garbage out. The curation and normalization of a dataset, whether for training a foundation model or fine-tuning an existing model, need to be streamlined properly so that any structural complexity and messiness of the collected data doesn’t propagate forward in the training process and degrade the model’s performance.

At a large scale, data can be incredibly messy, as mentioned before. There are so many sources of natural language data that it’s hard to remain even-keeled when organizing and cleaning a large dataset meant for an LLM. Untangling this binary ball of yarn is especially difficult for large organizations that may not have the means to keep all of their data clean, organized, and normalized.

Take the government, for example. There are many different sectors and many different groups, and each group has its own hierarchy. It’s folly to think that they can easily maintain data in some magical tabular format so that an LLM could ingest their data and run for president next year. Multiple divisions probably have different infrastructure, compliance rules, and modalities of data (text, video, data), each of which probably has its own file-format-specific quirks. It’s incredibly difficult to hack together data in organizations like this to be LLM-ready.

Companies like Unstructured.io understand the complexity and challenge this problem poses for builders in the LLM space. They aim to minimize the challenges of working with fragmented data by streamlining it into clean JSON outputs that are more easily ingested by LLMs. To see just how crucial data preparation tools are to the success of LLM builders, take a look at how widely the open-source Unstructured library has spread, with over 150k downloads and use in over 500 open-source repositories. Having open-source tools like Unstructured that can help connect the seams across a complex data hierarchy will only enable more organizations to leverage the power of LLMs to supercharge their operations.

The new LLM stack that’s being slowly crafted largely relies on models that are being trained, updated, and provided by large organizations. Training an LLM from scratch is probably not a realistic path for people not named OpenAI, Google, MosaicML, etc., but fine-tuning one and making it more domain-specific is. For this reason, data preparation is now one of the most crucial parts of the LLM development process. Any good chef will tell you that a great dish starts with preparation, and it’s clear that the rules for LLM mise en place are already taking shape.