How To Keep Up With Advancements in AI

Zian (Andy) Wang

The field of Machine Learning is ever-evolving, with a constant stream of new developments emerging from research labs, tech companies, and academic institutions around the world. Each day, it seems there's a new algorithm to learn, a novel model architecture to grasp, or an innovative application to explore. This wealth of information includes releases of cutting-edge software tools, announcements of significant breakthroughs, and a plethora of newly published papers that push the boundaries of what's possible with ML. It's both an exciting and overwhelming time to delve into the world of ML, as the pace of innovation is truly breathtaking.

Figure Out Your Interest

As with any vast field of study, the first step to effectively following ML content is to identify your areas of interest. ML is a broad domain with numerous subcategories such as Supervised Learning, Unsupervised Learning, Reinforcement Learning, Deep Learning, and more. Each subfield comes with its unique set of techniques, applications, and nuances. Therefore, understanding which of these areas piques your curiosity the most can help streamline your educational journey.

On the other hand, your goal might not be tied to a specific ML subcategory. Perhaps you're more interested in staying abreast of the latest advancements in the field at large, in which case a broader, more general approach would be more suitable. Alternatively, you may be captivated by the architectural aspect of ML, always on the hunt for the latest and most efficient model architectures.

All in all, there’s always a tradeoff between the level of detail that is possible to digest and the range of topics that can be closely followed.

The type of content you prefer to consume is also a crucial factor to consider. Are you a technical enthusiast who relishes the intricate details of ML models and algorithms? Or do you prefer to stay updated with the high-level news, understanding the impact and applications of ML in everyday life? Knowing your preferences and aligning your reading with them is vital to effectively absorbing the vast ML literature.

With that being said, no matter your interests, focus areas, and technical proficiency, there are patterns and “rules of thumb” that can help you keep up with the academic literature efficiently and effectively.

Quantity Over Quality

In a paradoxical twist to conventional wisdom, the key to keeping pace with academic literature, especially in the dynamic field of machine learning, often hinges on the philosophy of "Quantity over Quality." This principle doesn't suggest that we diminish the importance of the quality of the information we consume. Instead, it encourages maximizing exposure to a broad spectrum of topics, then selectively homing in on those most aligned with your interests and objectives.

Many beginners in the field, eager to master every nuance, may find themselves tumbling down a rabbit hole, trying to unravel each intricate detail of a particular concept. This approach can quickly consume hours, leaving you with a daunting array of new concepts to decipher and, potentially, a sense of being overwhelmed. While an exhaustive understanding of certain concepts may enhance your comprehension of an article or paper, more often than not a high-level grasp of an idea is sufficient. Moreover, hours spent on a topic outside your expertise often leave you no further along than when you started.

As you navigate through an array of publications, two guiding questions can serve as a barometer for your understanding:

Do I understand how this concept contributes to the overarching purpose?

Do I understand why the author is using it?

These questions target the core purpose and the rationale behind the application of any given approach or paradigm. For instance, understanding why a specific machine learning algorithm is chosen for a task involves recognizing the unique features of the algorithm that make it suitable for the task at hand. Similarly, understanding the absence of a concept can also be enlightening. If a certain technique or approach wasn't used, what would be the impact on the overall project or study?

If you can give even a rough answer to both of these questions, you likely understand the concept's role within the larger scope of the work. This context is usually sufficient, and digging deeper might not yield significant additional insights unless the topic is directly related to your specific area of focus.

Places to Look

Generally, there are two types of places on the internet that will provide you with the latest advancements in machine learning: community-based forums and professional publications. Note that the following list will not be all-encompassing but should rather be treated as a starting list of websites and publications to follow for keeping up with the rapidly changing field of ML.

Community-Based Forums

These websites and/or forums allow anyone to post their findings or opinions, so certain perspectives and speculations should be taken with a grain of salt.

Subreddits

Reddit, with hundreds of millions of monthly active users, is one of the biggest internet forums. When it comes to machine learning, there are countless subreddits covering every possible corner of the field. Reddit is an exceptional platform for staying informed about recent developments you might have missed, and its community also excels at uncovering lesser-known yet uniquely captivating news. Among the multitude of machine learning and data science-focused subreddits, a few popular ones offer a wide range of information and discussion.

Hacker News

Hacker News, an offshoot of Y Combinator, offers a unique blend of technology and entrepreneurship, providing a distinct perspective on machine learning advancements beyond simply the technical side. The site operates through user-submitted stories, which the community then votes and comments on.

Although not solely dedicated to machine learning, Hacker News covers a wide spectrum of tech-related topics, including ML. The diverse threads under "Ask HN" and "Show HN" can inspire new interests and side projects. However, given its user-generated nature and broad focus, adopting the "Quantity over Quality" approach is advised here as well.
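Because Hacker News is so broad, a small filter can do the first pass of skimming for you. The following is a minimal sketch, assuming the public Hacker News Firebase API (the `topstories` and `item/<id>` endpoints). The keyword list and the function names `is_ml_story`, `get_json`, and `ml_top_stories` are hypothetical choices made for illustration, not part of any official tool.

```python
import json
import re
import urllib.request

HN_API = "https://hacker-news.firebaseio.com/v0"

# Hypothetical keyword list (an assumption); tune it to your own interests.
KEYWORDS = ("machine learning", "deep learning", "neural", "llm", "transformer")


def is_ml_story(item, keywords=KEYWORDS):
    """Return True if the story title contains a word starting with any keyword."""
    title = (item or {}).get("title", "").lower()
    return any(re.search(rf"\b{re.escape(k)}", title) for k in keywords)


def get_json(path):
    """Fetch one JSON document from the Hacker News API (needs network access)."""
    with urllib.request.urlopen(f"{HN_API}/{path}.json", timeout=30) as resp:
        return json.load(resp)


def ml_top_stories(limit=30):
    """Return the ML-looking stories among the first `limit` top stories."""
    ids = get_json("topstories")[:limit]
    items = (get_json(f"item/{story_id}") for story_id in ids)
    return [item for item in items if is_ml_story(item)]
```

Calling `ml_top_stories()` makes one request per story, so keep `limit` small. A title filter like this is crude and will miss relevant threads, which is in keeping with the "Quantity over Quality" approach: it is a way to narrow the stream, not a substitute for browsing.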

ResearchHub

ResearchHub is a forum-based website that allows anyone to publish their research or outlooks without barriers. It is similar to Reddit, but purely dedicated to academic research. Additionally, it has its own currency that is used for bounties, donations, and voting rights, similar to Stack Overflow’s reputation system.

On the forum, you will likely encounter many papers on topics that are less mainstream but just as interesting and worthy of study. Additionally, unlike on Reddit, community members peer review the published papers and videos to help ensure quality and accuracy.

Publications and Feeds

The following publications are more technically inclined and will appeal to those striving to squeeze every last bit of detail out of the latest computer vision model or those who want to dive deep into a new training method for LLMs.

arXiv

When it comes to keeping up with ML literature, arXiv is an invaluable resource. This open-access repository is where researchers often upload preprints of their work—versions that have not yet undergone peer review. Unlike the websites and forums mentioned above, papers published on arXiv are generally directed more towards a niche area in a larger field, although almost every day they will provide some unique ideas and methods that haven’t been attempted before.

Here are some popular arXiv categories to follow for the latest ML-related publications.

stat.ML (Machine Learning)

cs.LG (Machine Learning)

eess.SP (Signal Processing)

cs.CV (Computer Vision and Pattern Recognition)

cs.AI (Artificial Intelligence)

cs.CL (Computation and Language)
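If you would rather pull the newest submissions from these categories into a script than browse the listings by hand, the public arXiv API can return them as an Atom feed. The following is a minimal sketch, assuming the `export.arxiv.org/api/query` endpoint and its `search_query`, `sortBy`, `sortOrder`, and `max_results` parameters. The function names `build_query_url`, `parse_feed`, and `fetch_latest` are hypothetical and chosen for illustration.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # XML namespace used by Atom feeds


def build_query_url(categories, max_results=10):
    """Build a query URL for the newest submissions in any of the categories."""
    search = " OR ".join(f"cat:{c}" for c in categories)
    params = {
        "search_query": search,
        "sortBy": "submittedDate",
        "sortOrder": "descending",
        "max_results": max_results,
    }
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def parse_feed(atom_xml):
    """Extract title, link, and abstract from each entry of an Atom feed."""
    root = ET.fromstring(atom_xml)
    papers = []
    for entry in root.iter(f"{ATOM}entry"):
        papers.append({
            # Collapse the line breaks and repeated spaces the feed contains.
            "title": " ".join(entry.findtext(f"{ATOM}title", "").split()),
            "link": entry.findtext(f"{ATOM}id", ""),
            "abstract": " ".join(entry.findtext(f"{ATOM}summary", "").split()),
        })
    return papers


def fetch_latest(categories, max_results=10):
    """Download and parse the newest papers (needs network access)."""
    url = build_query_url(categories, max_results)
    with urllib.request.urlopen(url, timeout=30) as resp:
        return parse_feed(resp.read())
```

For example, `fetch_latest(["cs.LG", "stat.ML"], max_results=20)` would return up to twenty recent papers as dictionaries, whose titles and abstracts you can then skim before deciding what deserves a closer read.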

IEEE

Another notable source of ML literature is the Institute of Electrical and Electronics Engineers (IEEE). Known for its technical weight in the scientific community, IEEE offers a vast array of resources through its digital library, IEEE Xplore. This platform houses over five million documents, with a significant portion dedicated to machine learning.

"Transactions on Neural Networks and Learning Systems" and "Transactions on Pattern Analysis and Machine Intelligence" are key IEEE journals for keeping up to date with ML research. They publish peer-reviewed, high-quality studies on the latest techniques and applications in the field, and IEEE Xplore also hosts the proceedings of IEEE conferences.

Generally, IEEE is not the best choice for following the very latest advancements, as those are more conveniently accessed through preprint platforms like arXiv. IEEE Xplore serves better as a fact-checker or as a place to look up the references cited in a newly submitted paper.

Note that IEEE is a premium resource, and access to some papers may require a subscription. Of course, there are some gray corners of the internet where one can locate PDFs that aren't available via Open Access or databases for which your affiliated institution has a license, but we'll leave it up to you to seek out those sources for yourself.

Other Resources

Obviously, there are way more resources out there that can effectively keep you up to date with the newest sparks in ML. Below is an incomplete list of websites, blogs, and publications that are helpful:

ResearchGate (a networking site for researchers where papers and preprints are shared)

Tricks and Tips to Achieve Quantity Over Quality

Making the most out of an academic paper or a longer article involves knowing how to navigate it effectively. The ability to quickly identify the essential elements of an academic text, without getting bogged down in details, is a skill that is both crucial and time-saving. Here are some tips to help you efficiently distill information from academic papers:

First Read Through: Scan the Abstract and Figures

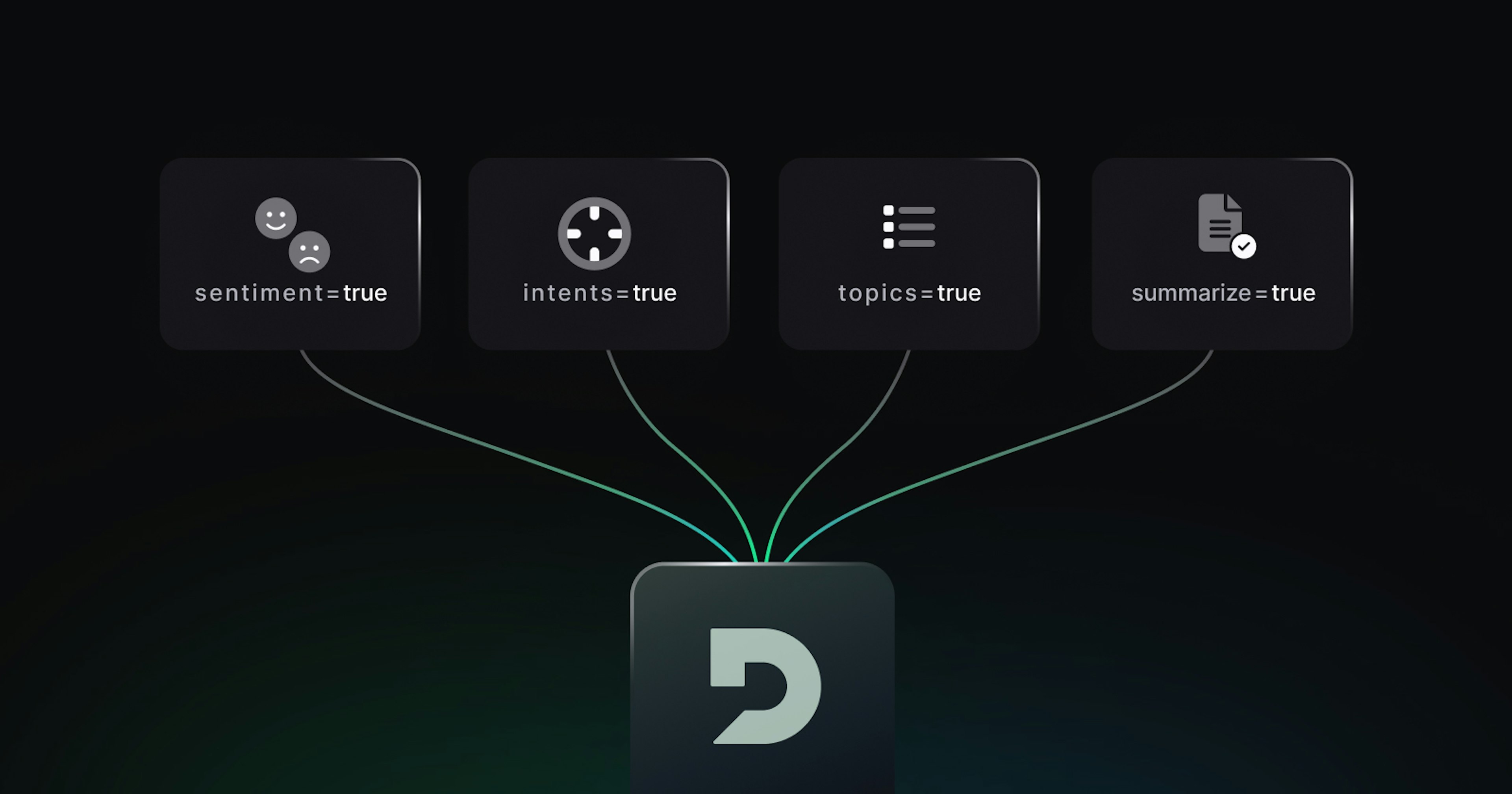

In your initial scan, focus on the abstract (or an overview in the case of an article) and any accompanying figures or diagrams. The abstract offers a concise overview of the paper's main points, results, and conclusions. It can help you grasp the paper's overall theme, the problem it addresses, and the solutions proposed. Figures, on the other hand, provide a visual explanation that complements the text.

Particularly in ML, diagrams will in most cases give you a clearer picture of the main idea without the nitty-gritty details or “prefaces” presented in earlier sections. Even better, diagrams often reveal which topics you need to know to fully understand the paper.

Remember, the goal of the first read-through is to understand the "big picture," not the minute details. It should give you a sense of whether delving deeper into the paper will be worth your time.

Leverage AI Tools

Don’t be afraid to use AI tools for help! They can significantly streamline your understanding of academic papers. For instance, Talk to PDF is a ChatGPT-like platform for “talking” to PDFs: you provide a PDF document as a link or a file and can then ask any question about it.

ChatGPT can summarize complex content, step through detailed explanations, and even generate questions to guide your learning. Tools like these can augment your reading experience, especially with denser, more technical papers. Keep in mind, however, that LLMs like ChatGPT are prone to hallucinations, so any explanation that was not already in the original text should be taken with a grain of salt.

Read Actively by Asking Questions

When scrolling through news articles, possibly over-hyped announcements, and paper preprints, it is important to distinguish between work that contributes greatly to a new research direction and work that is just another way of doing something that has already been done. Below are four questions to consider when reading, to avoid wasting time deciphering pages and pages of technical explanations.

How is this paper's content relevant to my learning goals?

Is the researcher asking the right question?

Are these findings reproducible?

What, if anything, does the paper contribute that is new?

These questions can help you evaluate the paper's significance, validity, and potential impact on your learning or research. Keeping them in mind can be a significant efficiency boost, as some readings are simply not worth the time and effort in such a rapidly advancing field.

The pace of advancements in machine learning can make keeping up with the latest literature feel overwhelming. However, with a strategic approach, the right resources, and a dose of curiosity, it's possible to stay informed and inspired. Remember, it's not about knowing everything, but about knowing enough to stay engaged, excited, and ready for the next breakthrough. Happy reading!